Connecting the Dots Across AI Research

*This article is not a comprehensive review of each report, but a synthesis of recurring signals across them.*

When you read more than ten major AI reports side by side, from tech giants, consultancies, and research institutions, patterns emerge that no single source reveals on its own. What I found wasn't just data about adoption rates or productivity gains. It was a consistent story about a threshold we've already crossed: AI is no longer experimental. The challenge now is operational: how to scale it, govern it, and make it durable. Instead of reviewing each report individually, I'm connecting the dots, looking at signals that repeat across multiple sources and what they mean for organizations trying to scale AI beyond experiments.

Early Movers Were Right

By the end of 2025, one thing had become obvious to anyone paying close attention: the teams that moved early on AI were right to do so. They didn’t wait for perfect frameworks or universal consensus. They experimented. They piloted copilots. They tested agents in real workflows. They learned faster than everyone else, and that matters. If there is a clear dividing line emerging as we move into 2026, it’s between organizations that treated AI as something to observe from a distance and those that were willing to work with it while it was still imperfect. The latter are ahead, and they should be.

Over the past month, I’ve been reading reports, surveys, and long-form analyses from consultancies, universities, and practitioners. Taken together, they tell a consistent story. AI adoption itself is no longer the hard part. The hard part now is what comes next. Not because AI is dangerous or out of control, but because once it becomes embedded into everyday work, it starts to behave like any other critical system: it needs structure. The early adopters didn’t fail; they reached the next level. They moved from “Can we do this?” to “How do we run this well, sustainably, and securely?”

When you step back and look at the numbers, the first reaction is not excitement. It’s disbelief.

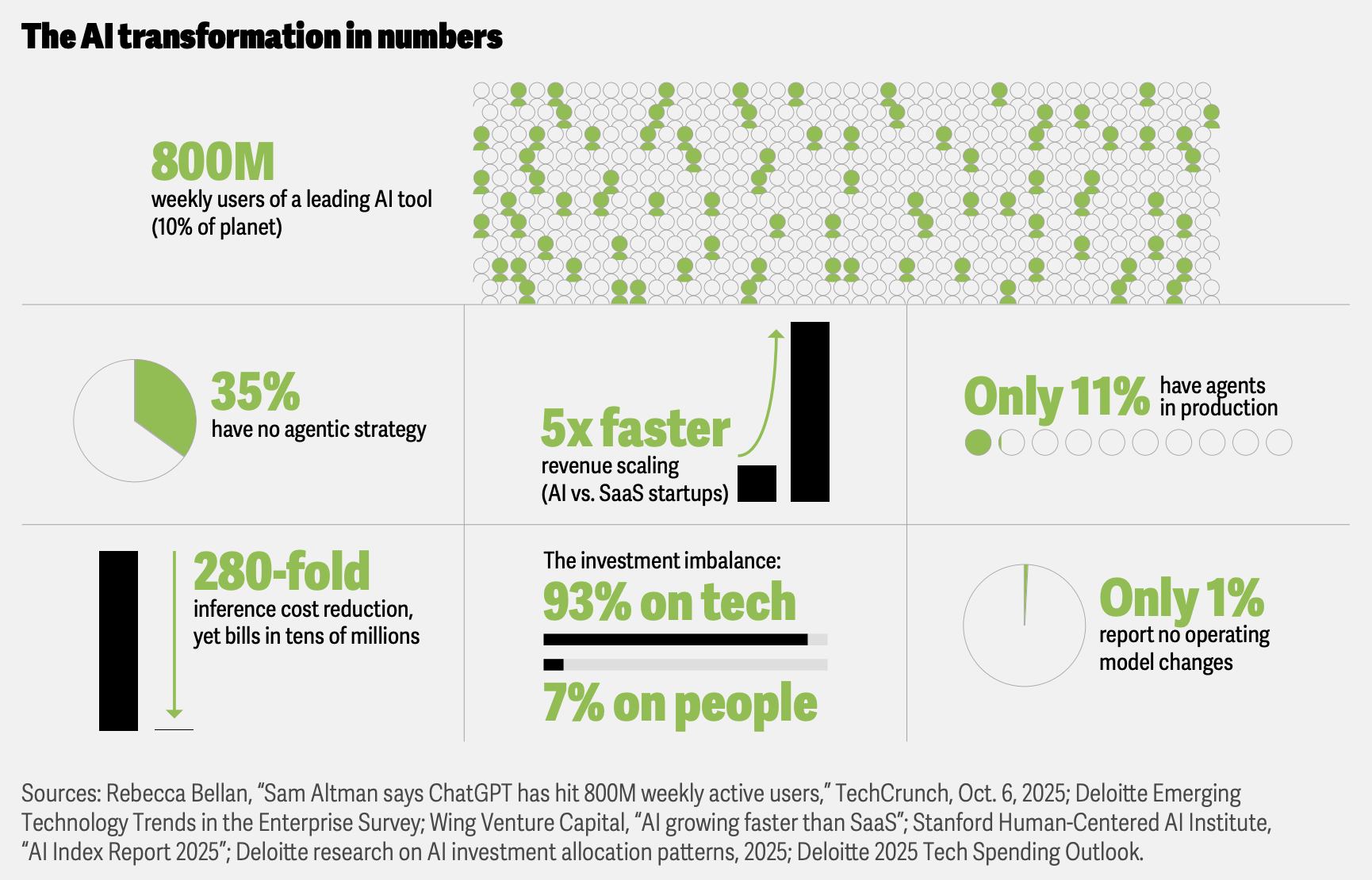

Eight hundred million people, by current estimates. Every week. Roughly ten percent of the planet now interacts with a single AI system (ChatGPT) as part of ordinary life. That figure alone should have ended the debate about whether AI is “early” or “experimental.” It hasn’t. Instead, it created a strange illusion of maturity: the sense that because AI is everywhere, it must already be under control. But scale does not equal readiness. And reach does not equal understanding. The sources behind these numbers, from TechCrunch’s reporting on ChatGPT’s growth to Stanford’s AI Index and Deloitte’s enterprise surveys, all point to the same uncomfortable truth: adoption has outrun structure.
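As a quick sanity check on the “roughly ten percent” figure, the sketch below divides the weekly-user estimate by world population. The population value is my own assumption (about 8.2 billion, close to UN estimates for 2025), not a number taken from the cited reports.

```python
# Sanity check: what share of the world uses ChatGPT weekly?
WEEKLY_USERS = 800_000_000         # estimate quoted in the text
WORLD_POPULATION = 8_200_000_000   # assumption: approximate 2025 world population

share = WEEKLY_USERS / WORLD_POPULATION
print(f"Share of world population: {share:.1%}")
```

Under that assumption the share comes out just under ten percent, which is consistent with the “roughly ten percent” framing.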

What many of these reports point to is not a crisis, but a transition. AI stopped being a standalone initiative and started becoming part of the fabric of organizations: inside documents, workflows, automations, and decision loops. That kind of presence changes the stakes. When intelligence operates at machine speed and touches sensitive data, security can’t be an afterthought or a separate function that checks boxes later. It has to exist alongside the work itself. Deloitte makes the same point, noting that security considerations must be built into foundational design rather than layered on after deployment (DI Tech Trends 2026). This isn’t a warning. It’s a sign of maturity.

Industry analysts, including Wing Venture Capital, have observed that AI-native companies are growing significantly faster than traditional SaaS businesses. That kind of acceleration resets expectations quickly: investors, boards, and leadership teams suddenly feel pressure not just to adopt AI, but to reorganize everything around it. And yet, when Deloitte surveyed enterprises for its 2026 Tech Trends report, the picture looked different. Only 11% of organizations have agentic AI systems running in production, another 38% are running pilots, and 35% have no agentic strategy at all.

Across these reports, from Deloitte, Stanford, and Anthropic, there is an encouraging shift in narrative. AI is no longer framed as something that needs to be restrained, but as something that needs to be designed responsibly. In fact, many organizations are already using AI to strengthen security: to prioritize risks, support compliance, and help teams make faster, better-informed decisions. The conversation has moved from whether AI belongs in core operations to how it should be governed once it’s there. That’s progress.

This way of thinking aligns closely with how we’ve approached BridgeApp from the beginning. We never believed AI should live across a fragmented set of tools, plugins, and disconnected services. Collaboration, automation, and intelligence work best when they exist inside a coherent system — one that organizations can see, shape, and control. That’s why on-premise deployment matters to us. Not as a selling point, but as a practical answer to a real question many teams are now asking: how do we scale AI while preserving security, compliance, and sovereignty?

When Trust Becomes the New Attack Surface

One of the quiet but defining shifts of the AI era is that cybersecurity is no longer only about systems, networks, or code. It’s about trust. Deloitte’s Tech Trends 2026 makes this clear when it describes how AI is reshaping the threat landscape not just technically, but socially — through deepfakes, synthetic identities, and AI-powered social engineering. These aren’t fringe scenarios or future hypotheticals. They’re already part of everyday operations, from phishing attempts that sound uncannily human to fake audio and video that can convincingly impersonate executives, partners, or even internal colleagues. As Deloitte notes, AI is accelerating external threats, but just as importantly, it’s changing how those threats work — exploiting speed, scale, and credibility rather than brute force (Deloitte Tech Trends 2026, Using AI in Cybersecurity).

What’s striking is that this isn’t framed as a loss of control, but as a call for clarity. Deepfakes don’t succeed because AI is too powerful; they succeed when systems lack strong identity, provenance, and governance. The answer isn’t to slow down AI adoption, but to raise the bar for how intelligence is deployed, authenticated, and overseen. Deloitte emphasizes that organizations are moving away from reactive security toward embedded, design-level safeguards — building visibility, access control, and accountability directly into AI-powered workflows. In this context, security becomes less about blocking innovation and more about making sure intelligence operates inside boundaries that humans actually understand and trust.

This is where architecture starts to matter more than tools. Organizations need systems where AI and collaboration exist together—not scattered across plugins and cloud services. That's the thinking behind BridgeApp's unified workspace approach.

That’s why BridgeApp was built to run on-premise inside an organization’s own infrastructure — unlike Slack or Teams — ensuring data sovereignty and compliance from day one. It’s also why BridgeApp is white-label and extensible by design, allowing enterprises to shape their own agents, workflows, and governance models rather than inheriting someone else’s defaults. In a world increasingly dominated by a handful of US tech giants, this approach offers something quieter but more durable: digital independence for European, Middle Eastern, and global organizations that want AI to serve their systems — not replace them.

As we look toward 2026, the signal is calm but unmistakable. The winners won’t be the teams that simply adopted AI first — they already did that work. The winners will be those who take the next step: turning experimentation into systems, speed into structure, and intelligence into something durable. This isn’t about slowing down innovation. It’s about making sure it lasts.

Digital Sovereignty Is Becoming an Infrastructure Decision

As organizations rethink where their AI runs and how deeply it’s woven into daily work, digital sovereignty stops being a political concept and becomes a practical one. It shows up not in strategy decks, but in architectural choices — quiet decisions about control, jurisdiction, and long-term independence. This perspective reflects broader infrastructure shifts highlighted in Deloitte Tech Trends 2026.

- Where intelligence lives. When AI continuously processes conversations, documents, and workflows, sovereignty is no longer just about data storage. It’s about where inference happens, who controls the models in production, and how visible those systems remain over time.

- Control without friction. Sovereign infrastructure doesn’t mean giving up modern usability. It means enabling teams to collaborate and automate freely while keeping sensitive operations inside environments they trust and govern themselves.

- Compliance by design. For governments, regulated industries, and global enterprises, sovereignty simplifies compliance. When AI and collaboration tools run on-premise or in private cloud environments, regulatory alignment becomes an architectural default rather than an ongoing negotiation.

- Independence from platform monopolies. As AI consolidates power among a small number of US cloud providers, sovereignty offers an alternative path — one where organizations aren’t forced to trade innovation speed for geopolitical or operational dependence.
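These points are architectural, so it can help to see one of them expressed as code. Below is a minimal, hypothetical sketch of a residency-aware routing policy. Each request carries a data-sensitivity label, and the policy lists which inference endpoints that request may use. The endpoint names, jurisdictions, and rules are illustrative assumptions. They are not drawn from Deloitte's report or from any specific product.

```python
from enum import Enum


class Sensitivity(Enum):
    PUBLIC = 1
    INTERNAL = 2
    RESTRICTED = 3


# Hypothetical inference endpoints; "operator" records who runs the hardware.
ENDPOINTS = {
    "on_prem": {"jurisdiction": "EU", "operator": "self"},
    "private_cloud_eu": {"jurisdiction": "EU", "operator": "provider"},
    "public_api_us": {"jurisdiction": "US", "operator": "provider"},
}


def allowed_endpoints(sensitivity: Sensitivity, home_jurisdiction: str) -> list[str]:
    """Return the endpoints a request may use under this illustrative policy."""
    allowed = []
    for name, ep in ENDPOINTS.items():
        # Assumed rule: anything non-public must stay in the home jurisdiction.
        if sensitivity is not Sensitivity.PUBLIC and ep["jurisdiction"] != home_jurisdiction:
            continue
        # Assumed rule: restricted data is only processed on self-operated infrastructure.
        if sensitivity is Sensitivity.RESTRICTED and ep["operator"] != "self":
            continue
        allowed.append(name)
    return allowed


print(allowed_endpoints(Sensitivity.RESTRICTED, "EU"))
```

In this sketch, compliance is a property of the routing table and does not have to be negotiated request by request. That is one reading of what it means for regulatory alignment to become an architectural default.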

This is also the logic behind BridgeApp. Built as an intelligent workspace for digital sovereignty, BridgeApp brings communication, collaboration, and AI-powered automation into a single secure platform that can run fully on-premise or in a private cloud. It delivers the usability teams expect from modern tools, while preserving the control and trust required by organizations that choose independence over convenience.

Experts at Stanford’s Human-Centered AI institute predict that 2026 will be the year sovereignty moves from slogan to strategy. As Stanford HAI Co-Director James Landay put it, “AI sovereignty will gain huge steam this year as countries try to show their independence from AI providers and from the United States’ political system. In one model of sovereignty, a country might build its own large LLM. In another example, a country might run someone else’s LLM on their own GPUs so that they can make sure their data doesn’t leave their country”.

This isn’t abstract geopolitics. It’s practical. As intelligence becomes woven through business processes, the question of where data moves, where inference runs, and under whose laws it lives influences procurement decisions, compliance frameworks, and even competitive differentiation. Some organizations can’t afford to treat their AI infrastructure as someone else’s black box. Fear is not the reason. They have to comply with data protection laws, governance standards, and industry regulations that simply don’t accommodate foreign cloud defaults. The Stanford insight reinforces a theme that’s been emerging across multiple reports: sovereignty isn’t a buzzword; it’s an infrastructure imperative.

When AI Stops Waiting for Prompts

The biggest change in AI isn’t how fluent it sounds — it’s how independently it behaves. Throughout 2025, AI systems quietly crossed a threshold from reactive tools to proactive actors. Forbes describes this transition as the move from generative to agentic AI, noting that these systems are designed not just to produce outputs, but to “act, reason, collaborate, and execute on their own” (Chuck Brooks, Forbes, 2025).

This shift changes how work gets done. Agentic AI doesn’t replace people — it changes their role. Instead of issuing every instruction, humans set direction, constraints, and priorities, while AI carries out sequences of actions across tools and workflows. The real challenge isn’t technological maturity; it’s organizational readiness. Autonomy forces teams to clarify ownership, accountability, and design — questions that were easier to avoid when AI only responded.

Two major 2025/2026 reports — Anthropic's "The 2026 State of AI Agents" and LangChain's "State of Agent Engineering" — show that agentic systems have moved decisively from pilots into production, backed by real usage data from organizations already deploying agents at scale:

- More than half of organizations (57%) now deploy agents for multi-stage workflows, with 16% running cross-functional processes across multiple teams. In 2026, 81% plan to tackle more complex use cases, including 39% developing agents for multi-step processes and 29% deploying them for cross-functional projects.

- Coding leads adoption. Nearly 90% of organizations use AI to assist with development, and 86% deploy agents for production code. Organizations report time savings across the entire development lifecycle: planning and ideation (58%), code generation (59%), documentation (59%), and code review and testing (59%).

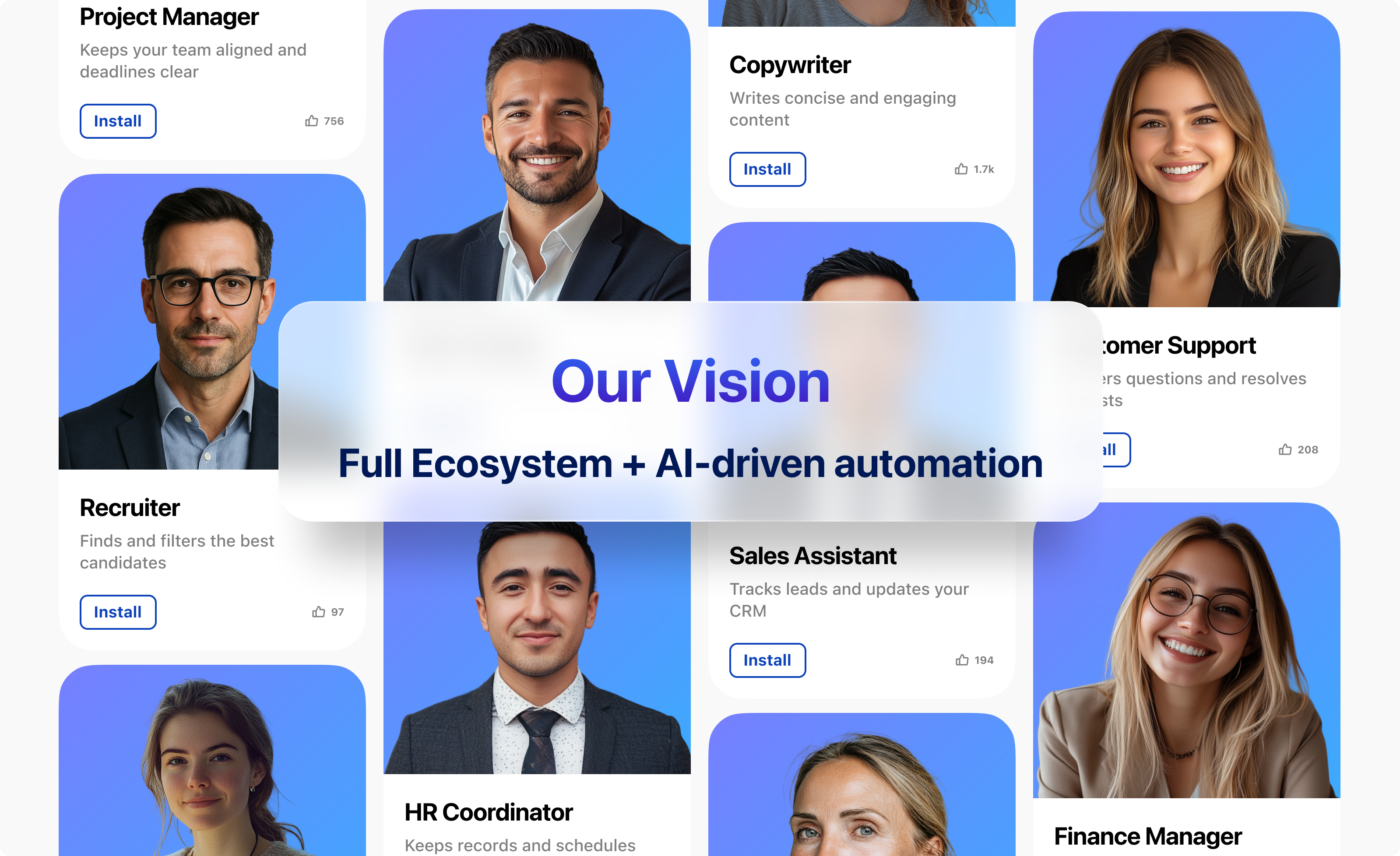

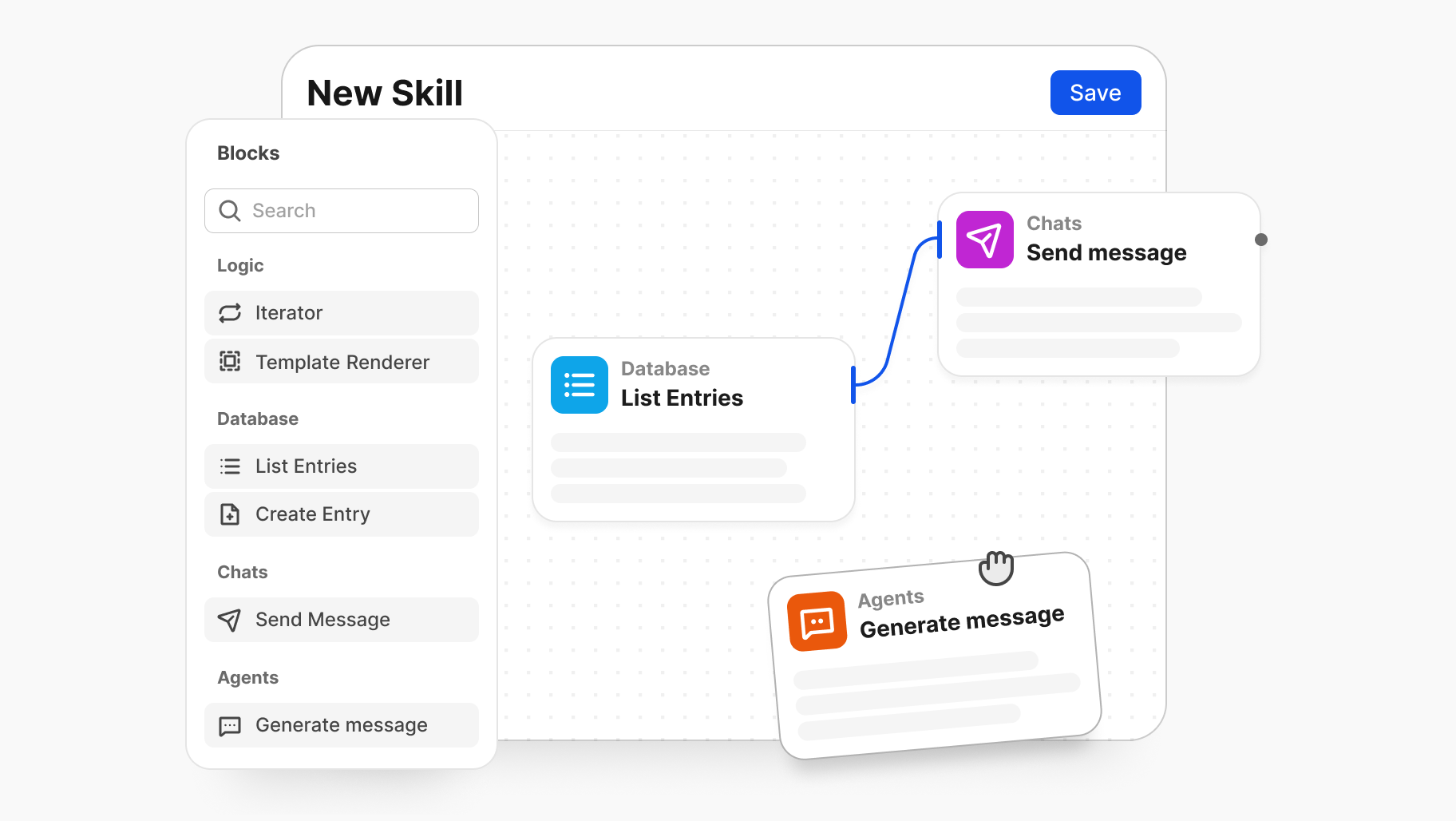

At BridgeApp, we approached AI agents as first-class participants in the workspace, not as add-ons. Agents can be fully customized — not just in what they do, but in how they think and communicate. You can build AI-powered assistants to amplify any role, from HR managers to analysts, with custom knowledge, communication style, and business logic that reflects company standards rather than generic defaults. These agents evolve alongside state-of-the-art LLMs, but remain grounded in your organization’s own context.

What gives these agents real leverage is access. BridgeApp agents can work with every entity inside the workspace — chat conversations, tasks, documents, databases, dashboards, and even other AI agents. This makes it possible to chain skills together into workflows that run end to end: an agent that monitors incoming requests, pulls relevant data, updates records, triggers follow-ups, and escalates only when human judgment is required. And when a specific capability doesn’t exist yet, it doesn’t become a blocker. Custom skills can be built to match exact processes, rather than forcing teams to adapt their work to someone else’s tooling.
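The chained workflow described above monitors requests, pulls data, updates records, triggers follow-ups, and escalates when needed. It can be sketched in a few lines. This is a hypothetical illustration, not BridgeApp's API. The `Request` type, the per-request `confidence` score, and the 0.7 escalation threshold are all assumptions made for the example.

```python
from dataclasses import dataclass, field


@dataclass
class Request:
    request_id: str
    text: str
    confidence: float  # hypothetical: how sure the agent is it can resolve this alone


@dataclass
class WorkflowResult:
    updated_records: list = field(default_factory=list)
    follow_ups: list = field(default_factory=list)
    escalations: list = field(default_factory=list)


ESCALATION_THRESHOLD = 0.7  # assumed cutoff below which a human decides


def pull_relevant_data(req: Request, records: dict) -> dict:
    """Look up the record associated with a request (empty if none exists yet)."""
    return records.get(req.request_id, {})


def run_workflow(requests: list, records: dict) -> WorkflowResult:
    """Process incoming requests end to end, escalating only low-confidence ones."""
    result = WorkflowResult()
    for req in requests:  # stands in for monitoring an incoming queue
        if req.confidence < ESCALATION_THRESHOLD:
            result.escalations.append(req.request_id)  # human judgment required
            continue
        data = pull_relevant_data(req, records)
        data["status"] = "handled"                     # update the record
        records[req.request_id] = data
        result.updated_records.append(req.request_id)
        result.follow_ups.append(f"notify requester of {req.request_id}")
    return result


records = {"r1": {"owner": "hr"}, "r2": {"owner": "finance"}}
requests = [
    Request("r1", "Update leave balance", 0.9),
    Request("r2", "Approve budget exception", 0.4),
]
res = run_workflow(requests, records)
print(res.updated_records, res.escalations)
```

The main design choice is that escalation is the exception path. Routine requests flow through every step without interruption, and only cases below the threshold reach a person.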

At that point, the question naturally shifts from “What can agents do?” to “What do they change economically?” This is where ROI enters the conversation, not as a promise, but as an outcome of targeting the right bottlenecks. As we outline in our own beginner’s guide to AI agents, returns vary widely by industry and deployment quality, but the pattern is consistent: when agents are applied to high-friction, process-heavy workflows, the impact compounds quickly. A detailed study published in August 2025 showed this clearly in mortgage processing. After introducing AI agents, ROI more than doubled, rising from 15% to 35%. Operational costs dropped by 60%, loan throughput increased at the same rate, and the average document review time fell from eight hours to just two. That 75% reduction in review time translated directly into higher capacity and profitability.
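The mortgage figures are easy to sanity-check. The sketch below reproduces the arithmetic from the numbers quoted above. The capacity multiple is my own derivation, not a figure from the study. It assumes document review is the bottleneck, which the study summary does not state.

```python
# Figures quoted from the August 2025 mortgage-processing study.
roi_before, roi_after = 0.15, 0.35
review_hours_before, review_hours_after = 8.0, 2.0

# "More than doubled": ratio of ROI after vs. before.
roi_multiple = roi_after / roi_before

# Share of review time removed per document.
review_time_reduction = 1 - review_hours_after / review_hours_before

# Assumption: if review is the bottleneck, documents per reviewer-hour
# scale inversely with review time per document.
review_capacity_multiple = review_hours_before / review_hours_after

print(f"ROI multiple: {roi_multiple:.2f}x")
print(f"Review time reduction: {review_time_reduction:.0%}")
print(f"Review capacity multiple: {review_capacity_multiple:.0f}x")
```

A 75% cut in review time is the same thing as a fourfold increase in review throughput. That equivalence explains why “75%” and “4x” can both describe the same change.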

AI Is Already Changing How Work Gets Done

One of the most grounded signals in the OpenAI State of Enterprise AI 2025 report isn’t about models or roadmaps. It’s about time. Across nearly one hundred enterprises, workers consistently report that AI is helping them produce higher-quality work faster — and in ways that feel tangible in daily operations, not theoretical. Seventy-five percent of surveyed employees say that using AI has improved either the speed or the quality of their output. For ChatGPT Enterprise users, that impact adds up quickly: on average, workers report saving between 40 and 60 minutes per active day, with data science, engineering, and communications roles saving even more — often 60 to 80 minutes a day. These aren’t marginal gains. They’re the kind of shifts that change how a workday feels.
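To put the per-day figures in perspective, the sketch below converts minutes saved per active day into hours per year. The number of active days is an assumption (200 here). The OpenAI report does not state it, so treat the output as an order-of-magnitude illustration.

```python
# Assumption for illustration only: how many days per year a user is active.
ACTIVE_DAYS_PER_YEAR = 200


def yearly_hours_saved(minutes_per_active_day: float,
                       active_days: int = ACTIVE_DAYS_PER_YEAR) -> float:
    """Convert a per-active-day time saving into hours saved per year."""
    return minutes_per_active_day * active_days / 60


# Ranges reported in the OpenAI State of Enterprise AI 2025 report.
ranges = {
    "average user": (40, 60),
    "data science, engineering, communications": (60, 80),
}
for role, (low, high) in ranges.items():
    print(f"{role}: {yearly_hours_saved(low):.0f} to {yearly_hours_saved(high):.0f} hours per year")
```

Under that assumption, 40 to 60 minutes a day comes to roughly 133 to 200 hours a year, or about three to five 40-hour weeks.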

The most revealing insight comes from where those gains appear. Accounting and finance teams report the largest time savings per interaction, followed closely by analytics, communications, and engineering. This suggests that AI isn’t just accelerating creative or exploratory tasks — it’s reshaping some of the most structured, rules-driven parts of enterprise work. And when those minutes are reclaimed at scale, the effects ripple outward.

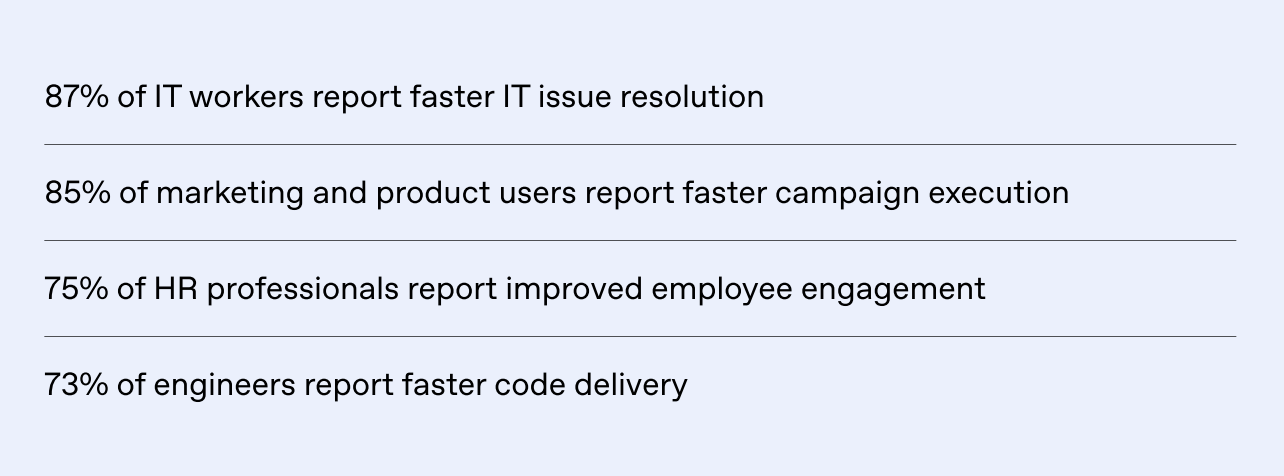

According to the same survey, 87% of IT workers report faster issue resolution, 85% of marketing and product teams report quicker campaign execution, 75% of HR professionals see improved employee engagement, and 73% of engineers deliver code faster. Productivity is part of the story, but not the whole of it. What’s really changing is who does certain kinds of work and how much human effort is still required to move things forward.

Not Everyone Uses AI the Same Way

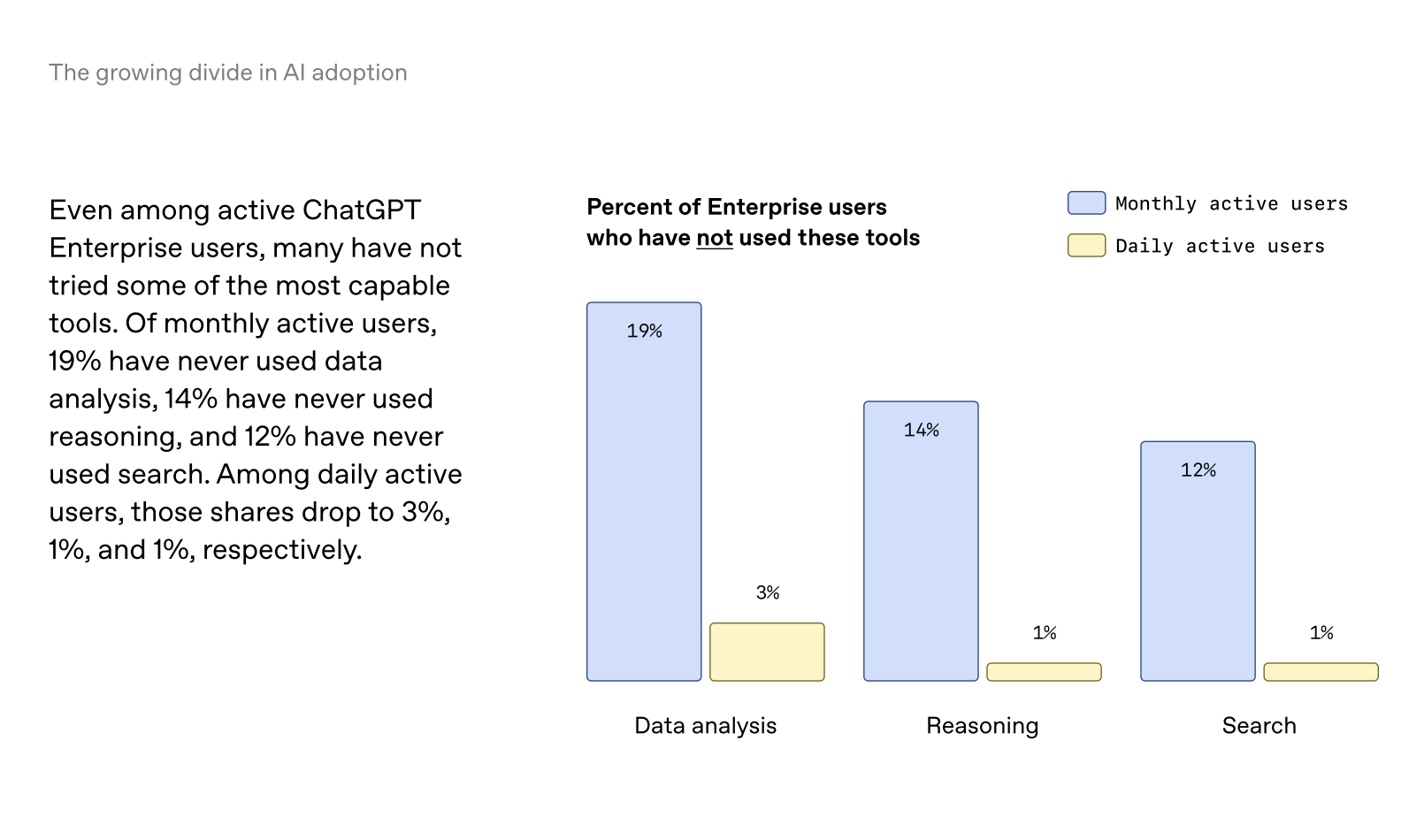

Enterprise AI adoption is uneven, even among early adopters. One of the most revealing charts in the OpenAI State of Enterprise AI 2025 report shows a growing divide. It is not between companies that use AI and those that don’t, but in how deeply people actually engage with it. Even among active ChatGPT Enterprise users, a significant share have never touched some of the most capable tools. Nineteen percent of monthly active users report they have never used data analysis. Fourteen percent have never used reasoning. Twelve percent have never used search. Among daily active users, those numbers drop sharply, to just three percent, one percent, and one percent, respectively.

This gap says less about willingness and more about fluency. People who work with AI every day don’t just ask more questions — they explore more capabilities. They learn what the system can do, and as a result, they extract more value from it. Those who engage occasionally tend to stay on the surface, using AI as a faster search bar or a writing assistant, without ever crossing into analytical or reasoning-heavy workflows. The result is a quiet inequality of outcomes. The same tool exists across the organization, but the returns concentrate where usage is frequent, contextual, and embedded into real work.

This is an important reminder as we move toward more agentic systems. Autonomy doesn’t emerge in a vacuum. It builds on habits, trust, and familiarity. Organizations that want AI agents to act meaningfully on their behalf can’t treat advanced capabilities as optional or hidden behind novelty. They need environments where AI is part of everyday workflow, not an occasional experiment. The divide we see today between monthly and daily users is likely a preview of a much larger one — between teams that integrate AI into how work actually happens, and those that never quite move past the basics.

How Agentic Workflows Unlock ROI

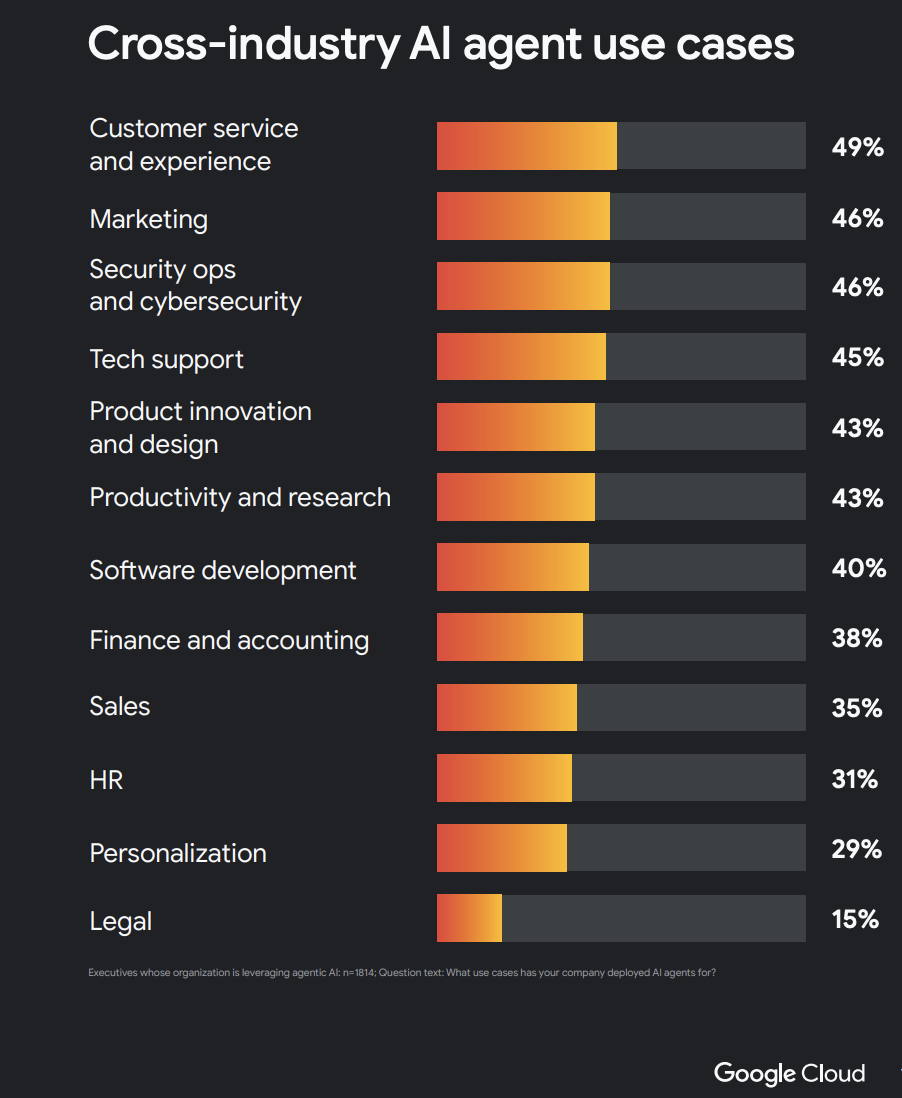

One of the most useful things about the Google Cloud ROI of AI 2025 report is that it doesn’t try to convince readers that AI is valuable in theory. It focuses on where value is already being realized in practice — across industries, regions, and organizational sizes. Taken together with other reports we’ve discussed, it reinforces a simple idea: AI ROI is not evenly distributed, and it doesn’t come from experimentation alone. It comes from focus, maturity, and integration.

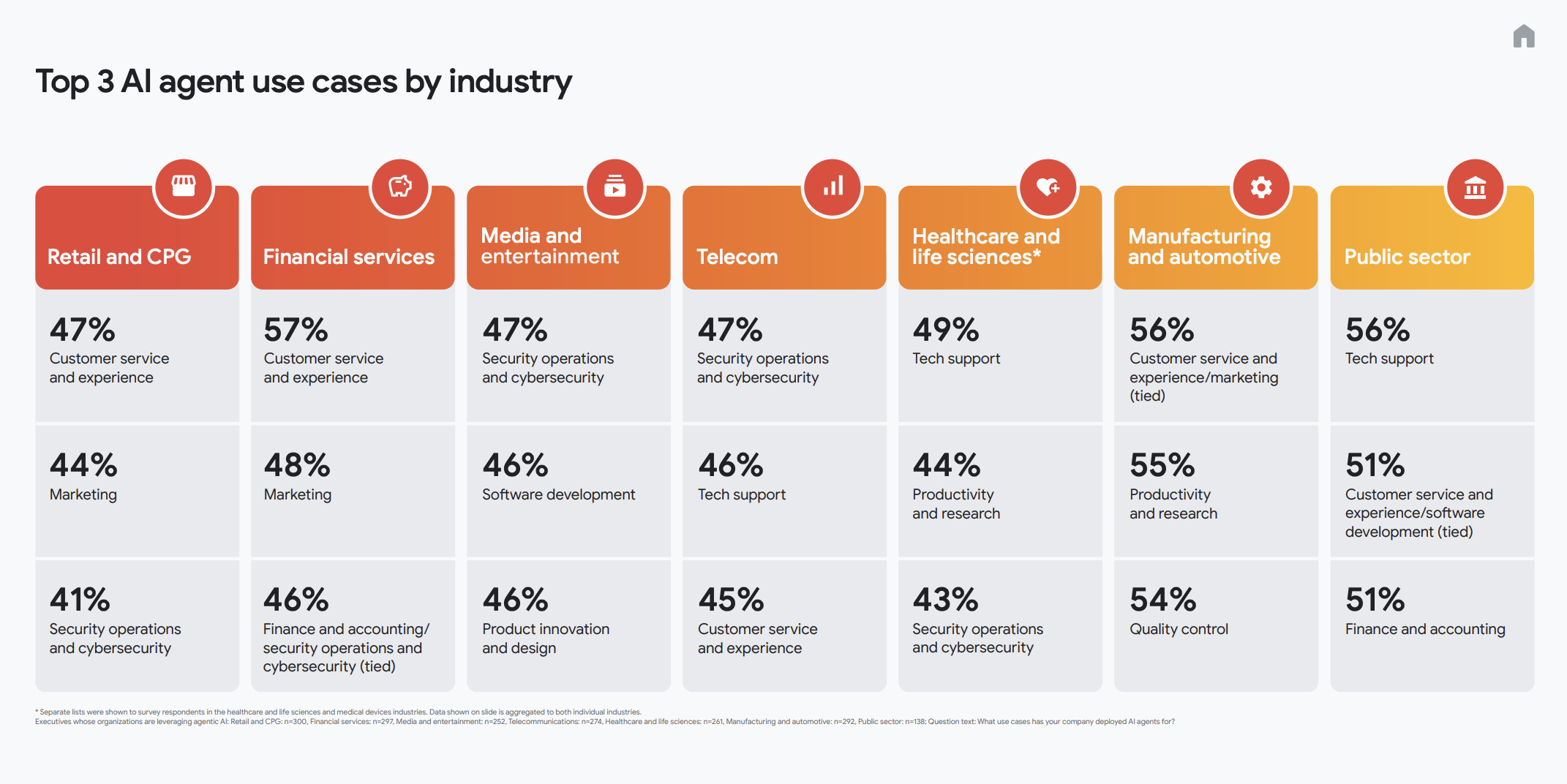

Across industries, the strongest returns from AI agents and automation consistently appear in a few areas:

- Customer service and experience. This remains the most common and most mature agent use case across sectors — from retail and financial services to manufacturing and the public sector. Organizations report faster resolution times, higher customer satisfaction, and reduced operational load on human teams.

- Marketing and growth operations. AI agents are increasingly used to execute campaigns faster, personalize messaging, and support content production at scale — not as creative replacements, but as force multipliers.

- Security operations and tech support. In media, telecom, healthcare, and IT-heavy environments, AI agents play a growing role in monitoring, triaging, and responding to issues before they escalate.

- Productivity and research. From engineering to R&D, AI agents are helping teams move faster through analysis, documentation, testing, and iteration cycles.

What’s notable is how consistent these patterns are across regions and company sizes. Agent adoption is no longer limited to Silicon Valley or early-stage startups. According to the report, adoption rates are already comparable — and in some cases higher — in Europe, the Middle East, and parts of Asia-Pacific, as well as across mid-sized and large enterprises. This reinforces a theme that’s been emerging across multiple sources: AI maturity is no longer about access to technology. It’s about operational readiness.

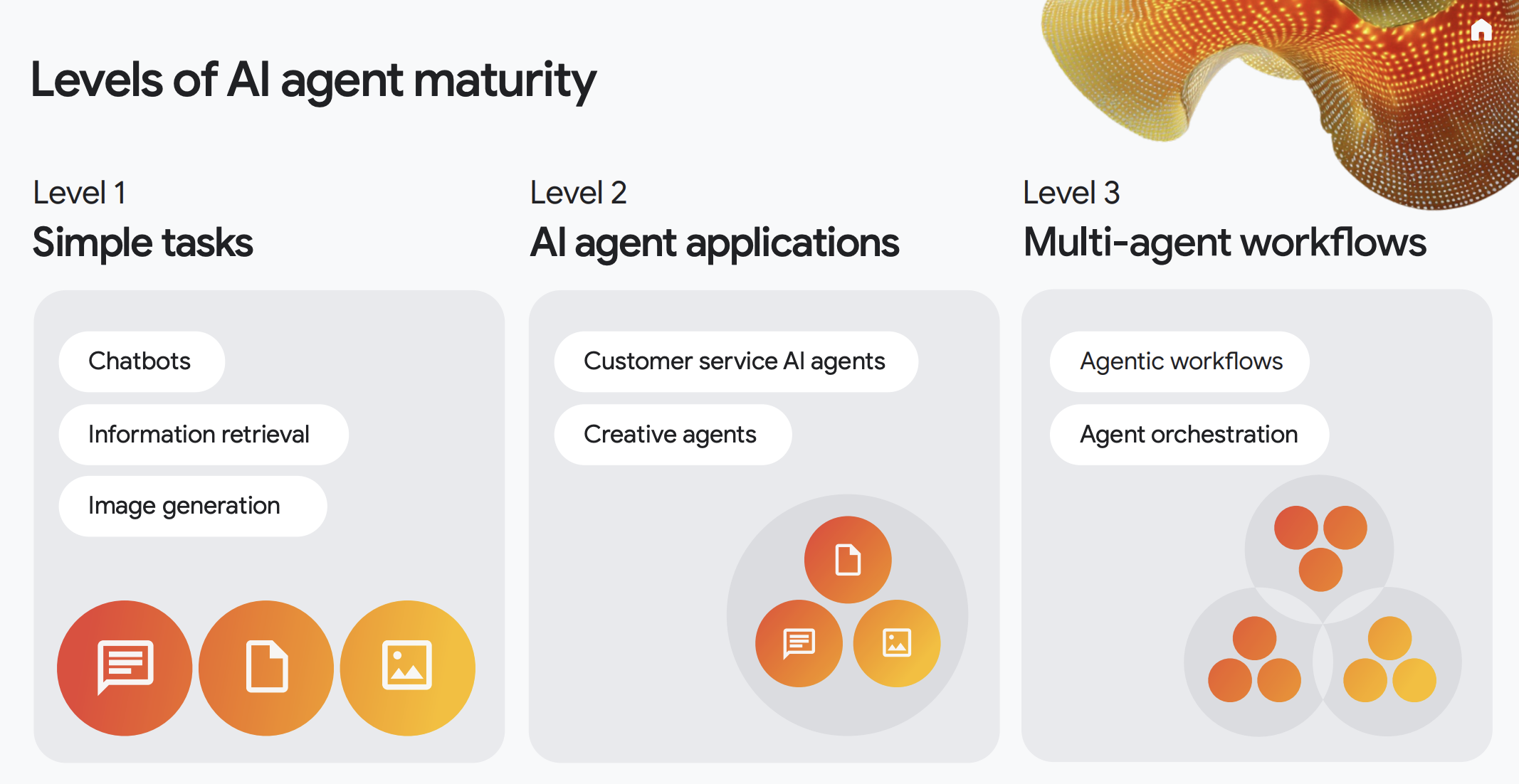

The report also outlines a clear progression in agent maturity:

- Level 1: Simple tasks, such as chatbots, information retrieval, and basic generation.

- Level 2: AI agent applications, including customer service agents and creative assistants embedded into workflows.

- Level 3: Multi-agent workflows, where agents coordinate with each other, orchestrate tasks, and operate across systems end to end.
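As a rough illustration, the three levels can be expressed as a simple classification rule. This is my own simplification for the sake of example, not the rubric Google Cloud uses. The two yes/no attributes are assumptions about what separates the levels.

```python
from dataclasses import dataclass


@dataclass
class Deployment:
    name: str
    embedded_in_workflow: bool      # does it act inside a business workflow?
    coordinates_other_agents: bool  # does it orchestrate or hand off to other agents?


def maturity_level(d: Deployment) -> int:
    """Map a deployment onto the three-level progression (illustrative only)."""
    if d.coordinates_other_agents:
        return 3  # multi-agent workflows
    if d.embedded_in_workflow:
        return 2  # agent applications embedded into workflows
    return 1      # simple tasks: chatbots, retrieval, basic generation


for d in [
    Deployment("FAQ chatbot", False, False),
    Deployment("customer service agent", True, False),
    Deployment("orchestrated claims pipeline", True, True),
]:
    print(d.name, "-> Level", maturity_level(d))
```

The rule checks the highest level first. A multi-agent system is usually also embedded in a workflow, so testing in the opposite order would misclassify it as Level 2.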

The strongest ROI appears at the higher levels — not because the technology is more impressive, but because the integration is deeper. Organizations that move beyond isolated use cases and toward orchestrated workflows see compounding benefits: fewer handoffs, less manual coordination, and faster execution across teams.

For me, this is the most important takeaway. AI delivers returns not when it is sprinkled across an organization, but when it is designed into how work actually happens. ROI follows coherence. It follows focus. And it follows maturity.

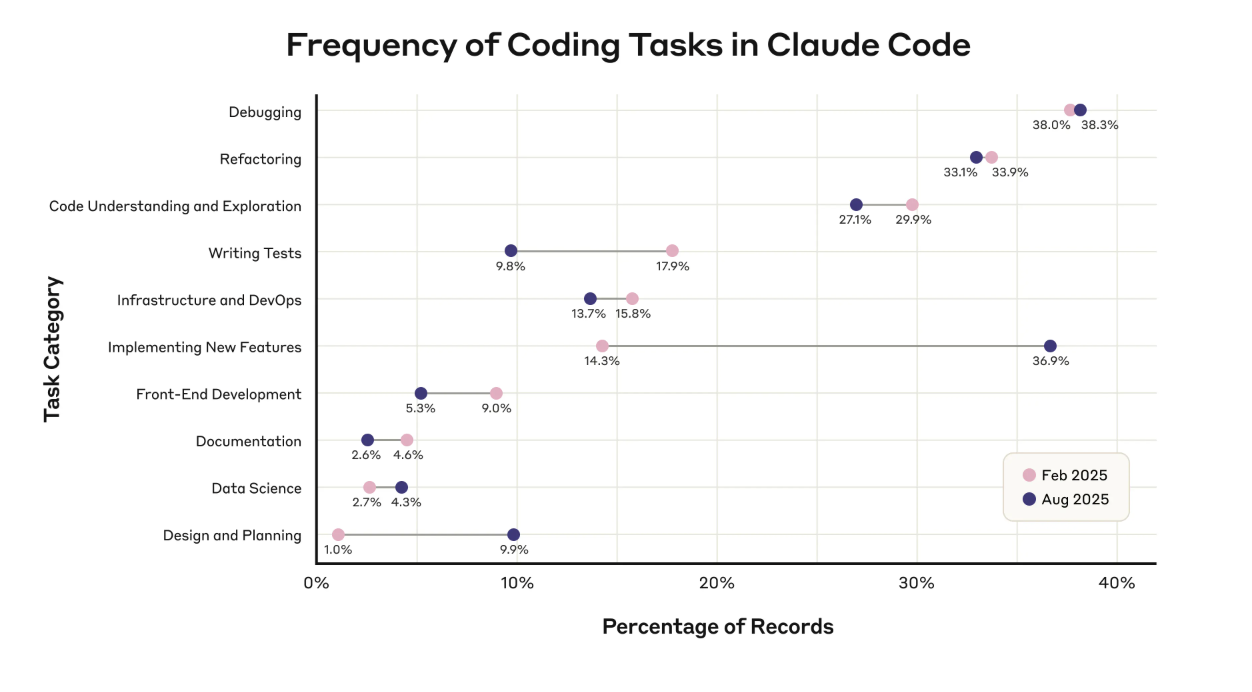

AI Agents Are Taking On More Complex Coding Tasks

After looking at where AI agents deliver value across industries, the next question becomes more subtle: what changes inside the work itself when agents mature? One of the clearest answers comes not from a vendor case study, but from Anthropic’s own internal research on how AI is transforming work at the company. Unlike surveys or executive interviews, this study looks directly at transcripts — at what people actually do with AI over time.

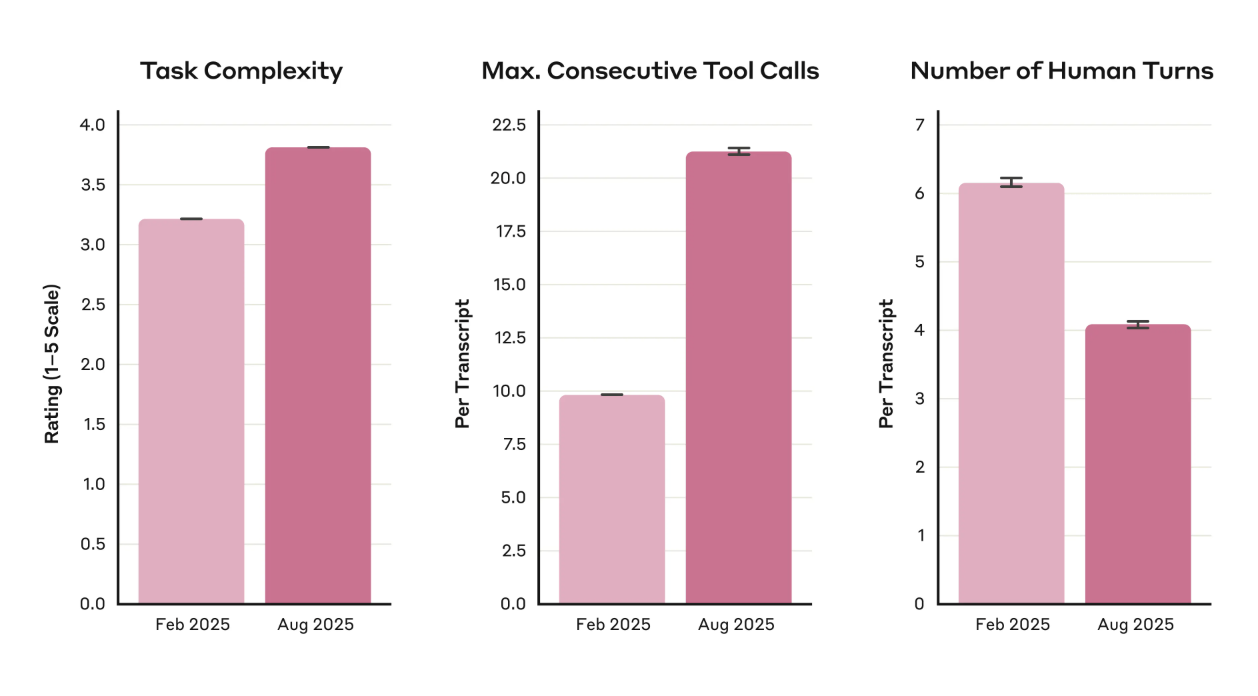

A clear pattern emerges: growing autonomy over time. Over just six months, Claude Code shifted from assisting with relatively bounded tasks to handling work that previously required sustained human expertise. Anthropic estimates task complexity on a five-point scale, where basic edits sit at the low end and expert-level work spans weeks or months of human effort. In February 2025, the average task complexity was 3.2. By August, it had risen to 3.8. In practical terms, that shift looks like moving from “troubleshoot a Python import error” to “implement and optimize caching systems” — not more prompts, but harder problems.

At the same time, the structure of collaboration changed. Claude Code began chaining together far more actions without interruption. The maximum number of consecutive tool calls per transcript more than doubled — increasing by 116%, from an average of 9.8 to 21.2 tool calls. These aren’t abstract reasoning steps; they’re concrete actions like editing files, running commands, and executing changes across systems. As agents became more capable, they stopped waiting for constant confirmation. They acted, checked their own work, and moved forward.

- Task complexity increased from 3.2 to 3.8 on a 1–5 scale

- Consecutive autonomous tool calls rose from 9.8 to 21.2 (+116%)

- Human turns dropped by 33%, from 6.2 to 4.1 per transcript
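The percentages in the list follow directly from the averages. The sketch below recomputes them from the rounded figures quoted above. Computed from these rounded averages, the drop in human turns comes out at about 34%. The reported 33% presumably reflects Anthropic's unrounded data.

```python
def pct_change(before: float, after: float) -> float:
    """Percentage change from `before` to `after`."""
    return (after - before) / before * 100


# Averages quoted from Anthropic's internal study, start vs. end of the six-month window.
metrics = {
    "task complexity (1-5 scale)": (3.2, 3.8),
    "consecutive tool calls": (9.8, 21.2),
    "human turns per transcript": (6.2, 4.1),
}

for name, (before, after) in metrics.items():
    print(f"{name}: {before} -> {after} ({pct_change(before, after):+.0f}%)")
```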

The most important signal is the drop in human turns, which means less micromanagement. People are still defining goals and reviewing outcomes, but they’re no longer required to guide every intermediate step. This is what agentic maturity looks like in practice: not speed alone, but confidence. Confidence that systems can carry intent forward, handle complexity, and return results without constant supervision.

Read next to the Google Cloud ROI data, the Anthropic findings add an important dimension. ROI doesn’t emerge simply because agents exist. It emerges when agents are trusted with harder work, allowed to operate with continuity, and embedded deeply enough that human attention becomes a strategic input rather than an operational bottleneck. That combination — autonomy, complexity, and reduced oversight — is where agentic systems stop being impressive and start being indispensable.

Why AI Is Now Critical — at Every Company Size

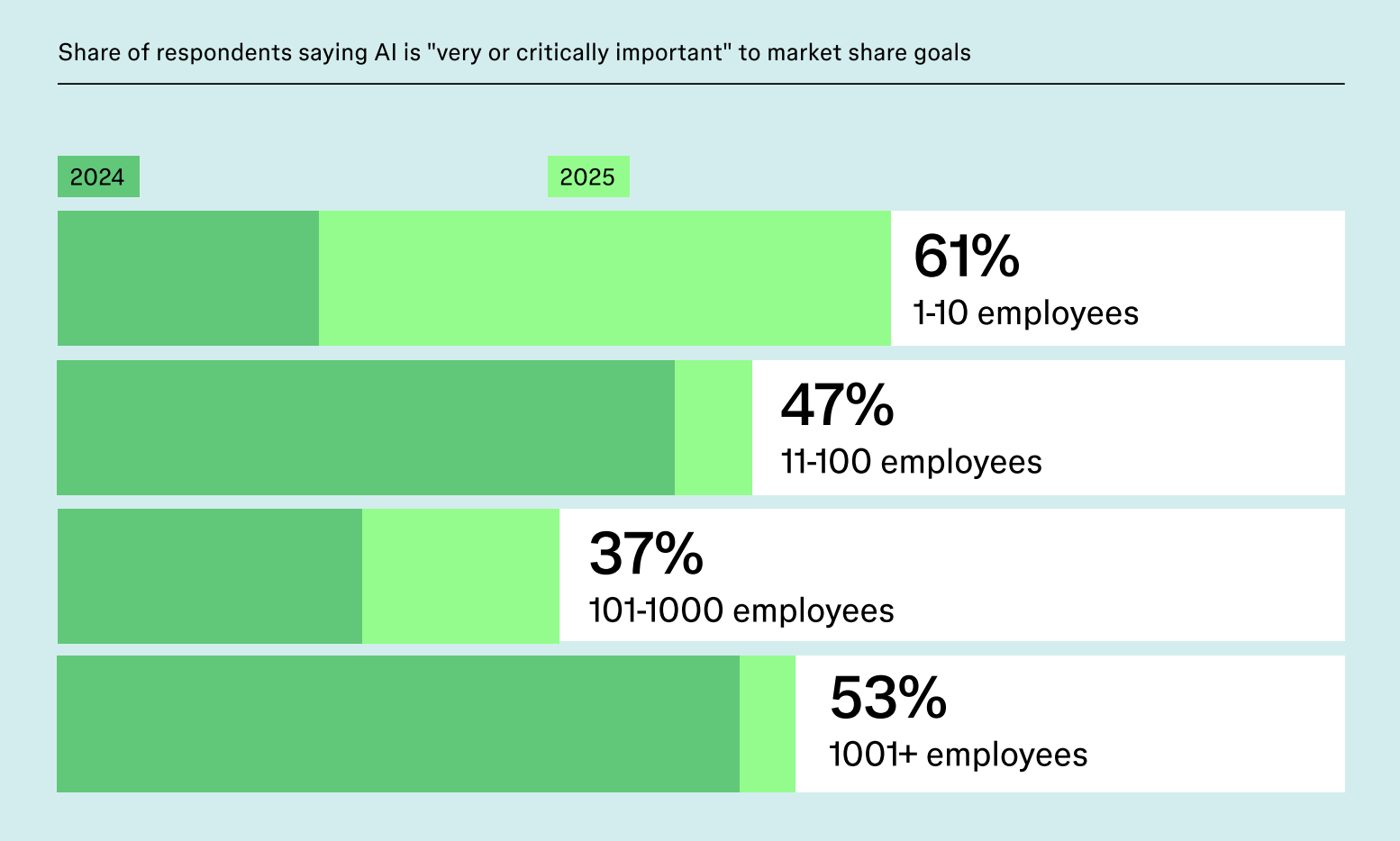

One of the clearest signals that AI has moved from experimentation to strategy comes from an unexpected place: company size. According to Figma’s 2025 AI Report, organizations of every scale now see AI as more critical to revenue growth, market share, and competitiveness than they did just a year ago. What’s striking is not that large enterprises care about AI — that was already expected — but how aggressively smaller companies are leaning into it.

In 2025, 61% of respondents from companies with 1–10 employees say AI is “very or critically important” to their market share goals. That number drops to 47% for companies with 11–100 employees and 37% for mid-sized organizations, before rising again to 53% among enterprises with more than 1,000 employees. In other words, AI importance now follows a U-shaped curve: it matters most to those who either move fastest or operate at a massive scale.

Even more revealing: the pace of change. Small companies aren’t just adopting AI — they’re doubling down. Figma reports two- to three-fold year-over-year increases in how essential AI is perceived to be for small teams. Among Figma users at small companies, respondents were twice as likely to say that the majority of their work is now focused on AI projects compared to users at larger organizations. The share of small-company respondents who say AI is essential to their product doubled compared to last year, while perceptions among larger companies remained relatively stable.

This shift says a lot about where competitive pressure is coming from. Smaller teams don’t have the luxury of incremental gains. For them, AI isn’t about marginal efficiency — it’s about survival and differentiation. AI compresses time, reduces the need for large headcounts, and allows small organizations to operate with a level of sophistication that previously required scale. As a result, AI is no longer just a productivity enhancer. It’s becoming a prerequisite for staying relevant in the market.

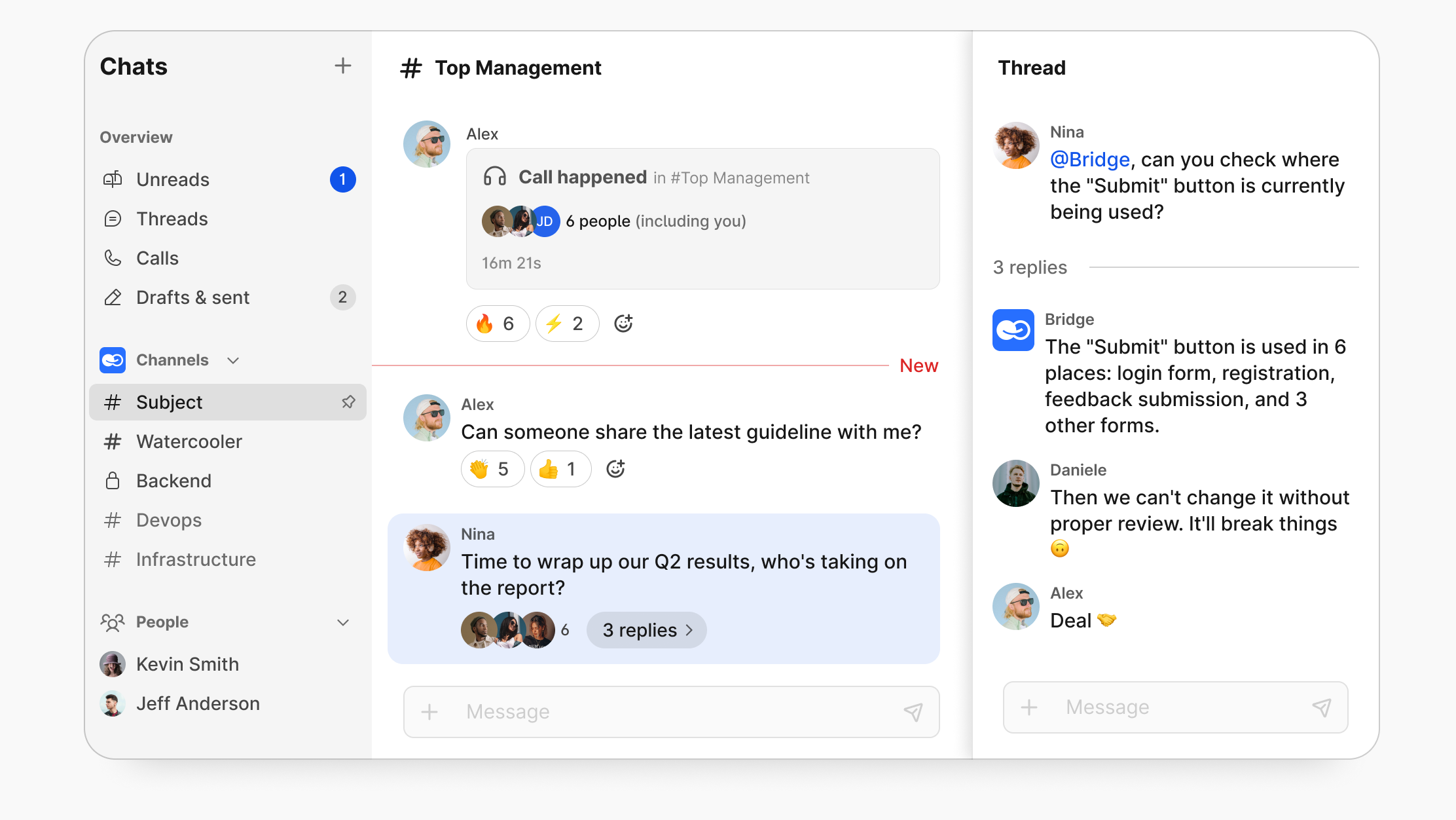

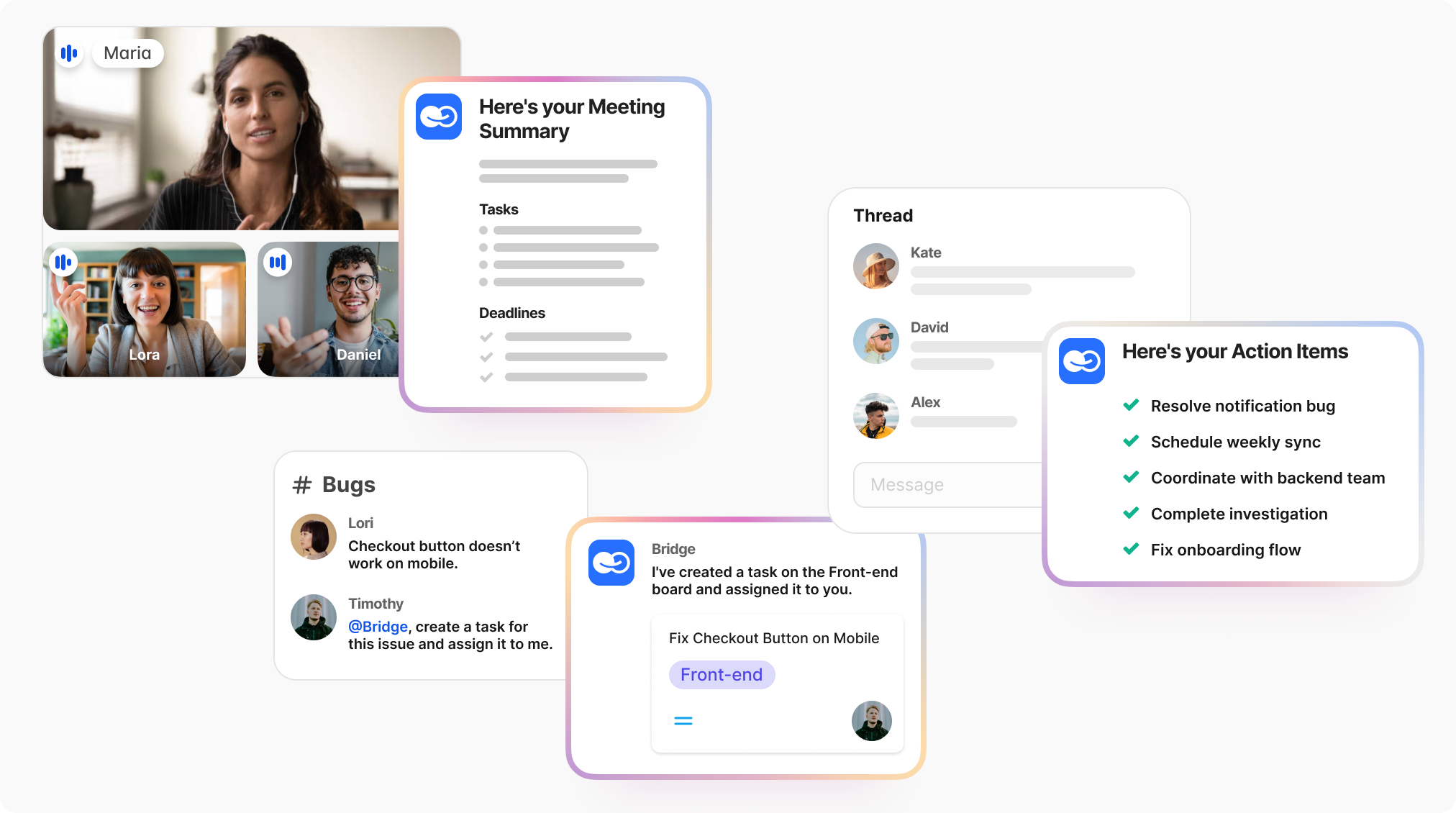

Bridge Copilot: Copilots Work Best Where Work Already Lives

At BridgeApp, we believe a copilot becomes useful when it lives inside the actual flow of work, where conversations, tasks, documents, and decisions already happen. That’s why Bridge Copilot isn’t “for IT,” or “for managers,” or “for HR” in isolation. It’s for teams that work together across contexts and need continuity rather than another tool to manage.

Bridge Copilot supports teams by staying close to the work itself:

- Summarizes long discussions into clear, actionable insights, so teams don’t lose decisions inside threads.

- Creates and updates tasks directly from conversations, turning talk into execution.

- Maintains living documentation, keeping project knowledge aligned as work evolves.

- Reduces context switching between chats, tasks, docs, and dashboards.

- Keeps knowledge consistent across teams and projects, even as people and priorities change.

The key idea is simple: a copilot should reduce cognitive load, not add another interface to learn. It shouldn’t ask users to explain their context — it should already have it.

Microsoft’s Copilot Usage Report 2025 offers a rare look at how copilots behave once the novelty wears off and real work begins. The most important insight isn’t about which feature is most popular — it’s about time.

Across organizations, Copilot usage consistently clusters around moments of transition: preparing for meetings, catching up after time away, turning raw information into structured output, and moving from discussion to decision. People don’t turn to copilots for abstract experimentation. They turn to them when time is scarce and clarity matters.

This reinforces a broader trend we’ve seen across reports this year: copilots become indispensable not because they are powerful, but because they are present at the right moments. When embedded into the workspace itself — rather than layered on top — they quietly change how work feels. Less catching up. Less manual translation between systems. More forward motion.

Why does this matter heading into 2026? Because copilots are no longer “AI features.” They are becoming the interface through which teams understand, prioritize, and move work forward.

Looking Ahead to 2026

These signals point to a different set of constraints emerging in 2026. The defining challenges of AI won’t be about access to models or headline-grabbing capabilities. They will be about how well organizations adapt: structurally, operationally, and humanly. Deployment is already ahead of understanding. Tools are moving faster than habits. Systems are evolving faster than skills. This growing gap between what AI can do and how prepared people and organizations are to work with it is where the next set of competitive differences will emerge.

What follows in this chapter isn’t a list of predictions pulled from a single source. It’s a synthesis — a set of signals that repeat across Bridge Insights and research from Stanford, McKinsey, OpenAI, Google, Anthropic, Microsoft, and others. Taken together, they point to a future where success with AI depends less on novelty and more on design: how intelligence is embedded, governed, taught, and sustained over time.

When people lack fluency, they tend to use AI defensively — for simple tasks, safe prompts, and limited experimentation. When fluency grows, AI becomes collaborative: users explore reasoning, analysis, and more autonomous workflows. Stanford’s findings reinforce the idea that the human layer is now the slowest-moving part of the AI system. Not because people resist change, but because learning, trust, and institutional adaptation take time.

One of the quieter but most consequential shifts heading into 2026 is how product work itself is changing. Not in tools, but in structure. According to Figma’s 2025 AI Report, AI is no longer reinforcing traditional handoffs between design, product, and engineering — it’s actively dissolving them. As the report states, “AI is increasingly blurring the lines between design, product, and engineering, pushing teams toward more collaborative and interdisciplinary ways of working.”

This matters because it changes the unit of work. Product development is no longer a linear relay — design hands off to engineering, engineering hands off to product, product hands off to delivery. With AI embedded across the workflow, teams solve problems together, in a shared context, often at the same time. Designers prototype logic. Engineers shape user experience. Product managers test assumptions directly in tools. AI doesn’t replace roles — it compresses the distance between them.

Looking toward 2026, this interdisciplinary default becomes a competitive advantage. Teams that cling to rigid role boundaries will feel slower, not because they lack talent, but because their structure fights the way work now flows. The most effective product teams will be those that organize around problems and workflows rather than titles — supported by systems where communication, execution, and intelligence live in one place. AI accelerates this shift not by forcing collaboration, but by making separation inefficient.

When the Biggest Blockers Aren’t Technical Anymore

One of the most striking themes in McKinsey’s State of AI 2025 report is not about what technology can do, but about what organizations struggle to do with it. Three years into the generative AI era, McKinsey’s global survey shows that nearly nine out of ten organizations report regular AI use in at least one business function, and many have begun experimenting with agentic systems. Yet most have not scaled AI across the enterprise or captured meaningful value at scale.

What stands out in the data isn’t a lack of tools. It’s that the technologies themselves are no longer the binding constraint. Adoption is broad — but deep integration, scaled impact, and measurable enterprise-level ROI are still rare. McKinsey’s findings reveal a persistent gap between using AI and realizing strategic value, with the majority of companies still stuck in experimentation or pilot stages rather than scaled transformation.

This gap isn’t primarily caused by algorithms or model quality. Instead, it reflects the organizational and operational challenges that emerge as AI becomes strategic. McKinsey highlights workflow redesign and enterprise-wide integration as crucial success factors, which implies that barriers such as fragmented workflows, unclear ownership of AI initiatives, and a lack of internal transformation are more limiting than technical access to AI itself.

In other words, the biggest blockers to impactful AI adoption are now organizational design, governance, and operating models, rather than infrastructure or compute capabilities. Organizations that succeed are those intentionally redesigning workflows, aligning leadership with AI strategy, and treating AI as core infrastructure rather than isolated tools. That shift is itself one of the defining trends heading into 2026.

Compute and Energy Are Becoming Strategic Constraints

One of the quiet but decisive shifts highlighted in the Stanford AI Index Report 2025 is that compute is no longer a purely technical consideration. It is becoming a strategic one. As AI systems scale, the report shows sustained growth in computational requirements, energy consumption, and overall costs associated with both training and inference. Progress continues — but it is no longer free, invisible, or abstract.

The Stanford AI Index documents how leading AI models now require orders of magnitude more compute than just a few years ago, alongside a corresponding rise in energy usage and infrastructure demand. This changes the nature of decision-making around AI. Questions that once belonged to engineering teams are moving into boardrooms: where inference runs, who controls the hardware, how predictable the costs are over time, and what trade-offs organizations are willing to make between performance, efficiency, and sovereignty.

The report also tracks how quickly the underlying hardware is improving: “New research suggests that machine learning hardware performance, measured in 16-bit floating-point operations, has grown 43% annually, doubling every 1.9 years. Price performance has improved, with costs dropping 30% per year, while energy efficiency has increased by 40% annually.”
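To make the compounding in these figures concrete, here is a small Python sketch. It is our own illustration, not code from the AI Index, and the helper names `doubling_time` and `remaining_fraction` are hypothetical. It converts an annual growth rate into a doubling time (ln 2 / ln(1 + r)) and an annual decline into the share of the original cost left after a given number of years. Under those standard compound-growth assumptions, a 43% annual gain works out to roughly 1.94 years, consistent with the quoted "doubling every 1.9 years."

```python
import math


def doubling_time(annual_growth: float) -> float:
    """Years for a quantity compounding at `annual_growth` per year (0.43 = 43%) to double."""
    return math.log(2) / math.log(1 + annual_growth)


def remaining_fraction(annual_decline: float, years: float) -> float:
    """Fraction of the original cost left after `years` of compounding decline (0.30 = 30%/yr)."""
    return (1 - annual_decline) ** years


# Hardware performance growth and energy-efficiency gains quoted above.
print(f"Performance doubles every {doubling_time(0.43):.2f} years")
print(f"Energy efficiency doubles every {doubling_time(0.40):.2f} years")
# Share of today's hardware cost remaining after two years of 30% annual declines.
print(f"Cost after 2 years: {remaining_fraction(0.30, 2):.2f} of today's")
```

The same helpers make the asymmetry discussed next easy to see: a cost curve that halves roughly every two years only helps organizations that can plan their compute purchases and deployments far enough ahead to ride it.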

What’s important is not just the scale, but the asymmetry. Organizations with access to stable infrastructure, long-term compute planning, and optimized deployment strategies gain compounding advantages. Others face rising marginal costs that make large-scale AI deployment harder to justify. In this environment, compute stops being a background assumption and becomes a limiting factor — one that directly influences which AI strategies are viable and which remain theoretical.

This is where energy enters the conversation as well. The AI Index highlights growing attention to the environmental and operational footprint of AI systems, not as a moral argument, but as a practical one. Energy availability, efficiency, and cost increasingly shape where and how AI can be deployed. As models become more capable, the ability to run them sustainably — and predictably — becomes just as important as model quality itself.

Taken together, these signals point to a broader shift. AI strategy is no longer only about choosing the right model. It’s about designing architectures that balance capability with control, performance with efficiency, and innovation with long-term feasibility. Compute and energy are no longer implementation details. They are strategic constraints — and, for organizations that plan well, strategic advantages heading into 2026.

Where BridgeApp Fits in the 2026 AI Landscape

When you step back and look at these shifts together — agentic systems taking on harder work, copilots becoming daily interfaces, product teams turning interdisciplinary, compute and governance becoming strategic, and workforce adaptation lagging behind deployment — one thing becomes clear.

AI is now embedded deeply enough that fragmentation becomes dangerous. When intelligence lives across scattered tools, clouds, and workflows, organizations lose visibility, control, and trust. When it lives inside a coherent system, AI becomes something teams can actually work with — not just experiment around.

The teams that moved early were right. But speed alone doesn't guarantee success. What separates the organizations capturing real value from those still experimenting is structure: unified systems, clear governance, and architectures designed for long-term control rather than short-term novelty. That's the work ahead. And it starts with asking not what AI can do, but where it should live.

This is the philosophy behind BridgeApp.

BridgeApp was built as an intelligent workspace where communication, collaboration, and AI-powered automation exist in one secure environment. Not layered on top of work, but woven into it. Teams don’t have to explain their context to AI — it already lives where decisions are made, tasks are created, and knowledge evolves.

As organizations move into 2026, three capabilities matter more than ever:

- Digital sovereignty. BridgeApp can run fully on-premises or in a private cloud, giving organizations control over where their data, models, and inference live. That is an increasingly critical requirement for governments, regulated industries, and global teams seeking independence from a handful of dominant U.S. cloud providers.

- Extensibility and adaptability. AI in 2026 won’t look the same across teams or industries. BridgeApp is designed to adapt — with customizable agents, workflows, skills, and modules that evolve alongside both business needs and state-of-the-art models.

- Unified context. Agents, copilots, documents, tasks, databases, and conversations all live in one system. This shared context is what allows AI to move from assistance to execution — and from experimentation to trust.

BridgeApp isn’t a promise about the future. It’s an answer to the present reality of AI at scale. A workspace designed not just to adopt intelligence, but to govern it, extend it, and make it usable over time.

In 2026, the organizations that succeed with AI won’t be the ones chasing every new capability. They’ll be the ones who designed their systems early enough to let intelligence grow without losing clarity, ownership, or control.

That’s the work BridgeApp is here to support.