Best AI-Driven Knowledge Base Software 2026

Traditional knowledge bases were artifacts of a human-centric era. They were built for the seekers and the readers—biological processors trying to index information at the speed of sight.

As the digital landscape matured, the requirements evolved in lockstep: first, we needed basic keyword matching; then, we graduated to the organized chaos of tagging. But the real pivot happened in the first quarter of the 21st century. (There’s a certain retro-futurist delight in finally referring to that era as "the past," isn’t there?) That was the dawn of AI search—the moment we stopped indexing letters and started mapping meaning. Yet, even now in 2026, vector databases and knowledge engines remain a curious exoticism in some legacy corners. While the avant-garde has moved toward semantic architecture, some are still hunting for needles in haystacks using 2015 magnets.

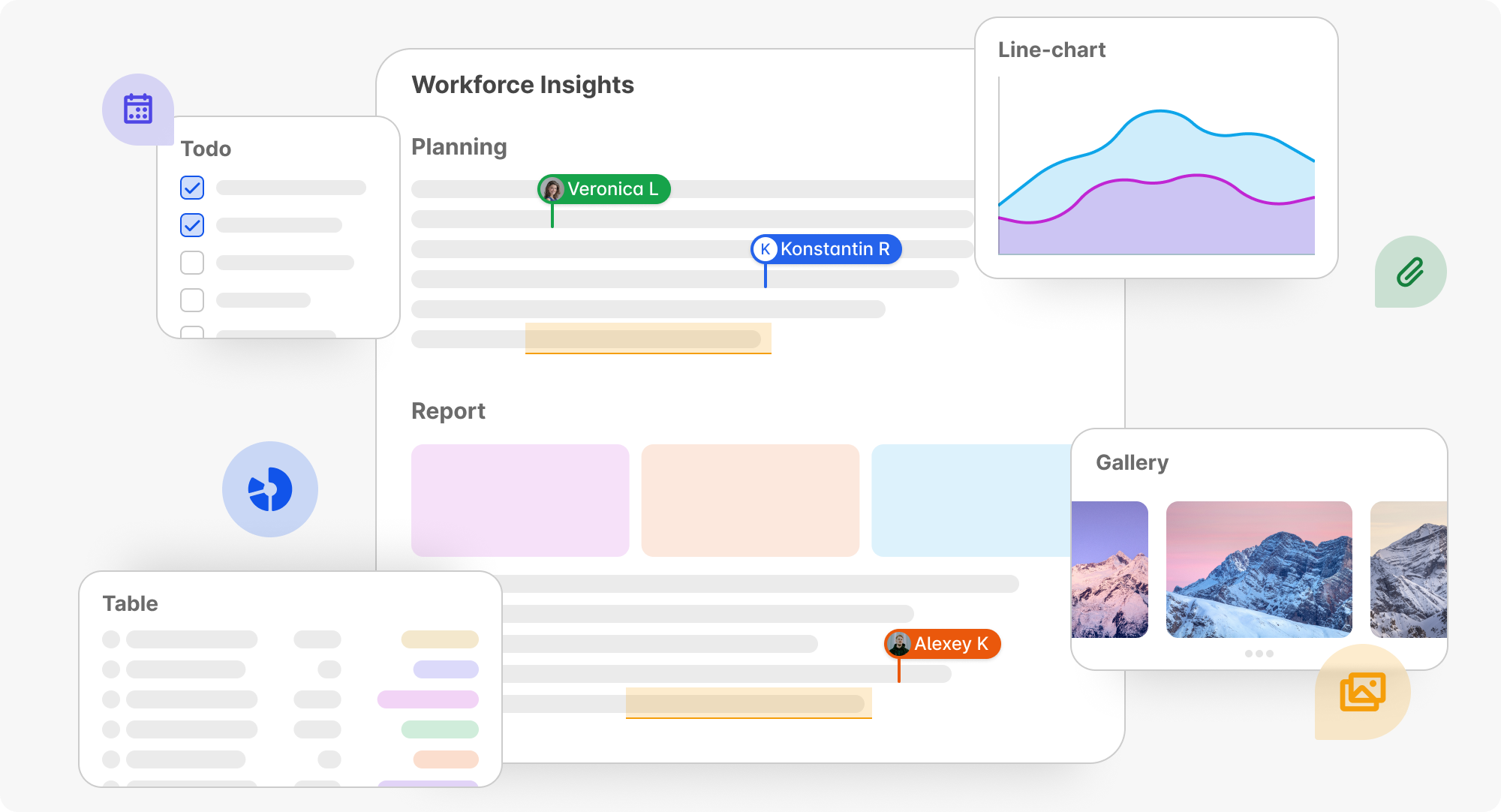

As we enter the second quarter of the 21st century (and yes, I’m still savoring the time paradox), the landscape has shifted. Teams are no longer just groups of humans; they have become hybrid. We’ve moved beyond simple "advisors" to a world populated by AI Copilots, agents, and neural teammates: a marching workforce that doesn’t just suggest, but acts. For them, a knowledge base is no longer a static reference library; it has mutated into an operating environment.

That is because agents don't just answer questions; they execute. And for an AI agent to execute a task with any degree of reliability, the integrity of its context is mission-critical. In this new architecture, the Knowledge Base serves as the long-term Organizational Memory (the persistent DNA of the enterprise: its mission, its intentions, and the meanings it carries), while the live database acts as the short-term working memory, where the "now" is processed.

So, the yardstick for a Knowledge Base has been recalibrated. It is now the persistent, evolving horizon of the hybrid team. It allows humans and agents to move in sync and to base their actions on a shared reality that spans everything from policy documents, foundational blueprints, and action templates to the granular history of every pivot and decision. If your knowledge hub isn’t providing actionable grounding for both man and machine, it’s not useful.

The modern Knowledge Base (KB) is no longer a static repository; it’s a living interface that must satisfy a dual mandate: it must be "comfortable" for silicon and carbon alike. For agents, “comfort” means structured context, semantic precision, and executable logic. For humans, comfort (now without quotation marks) means content structure, UX, and readability.

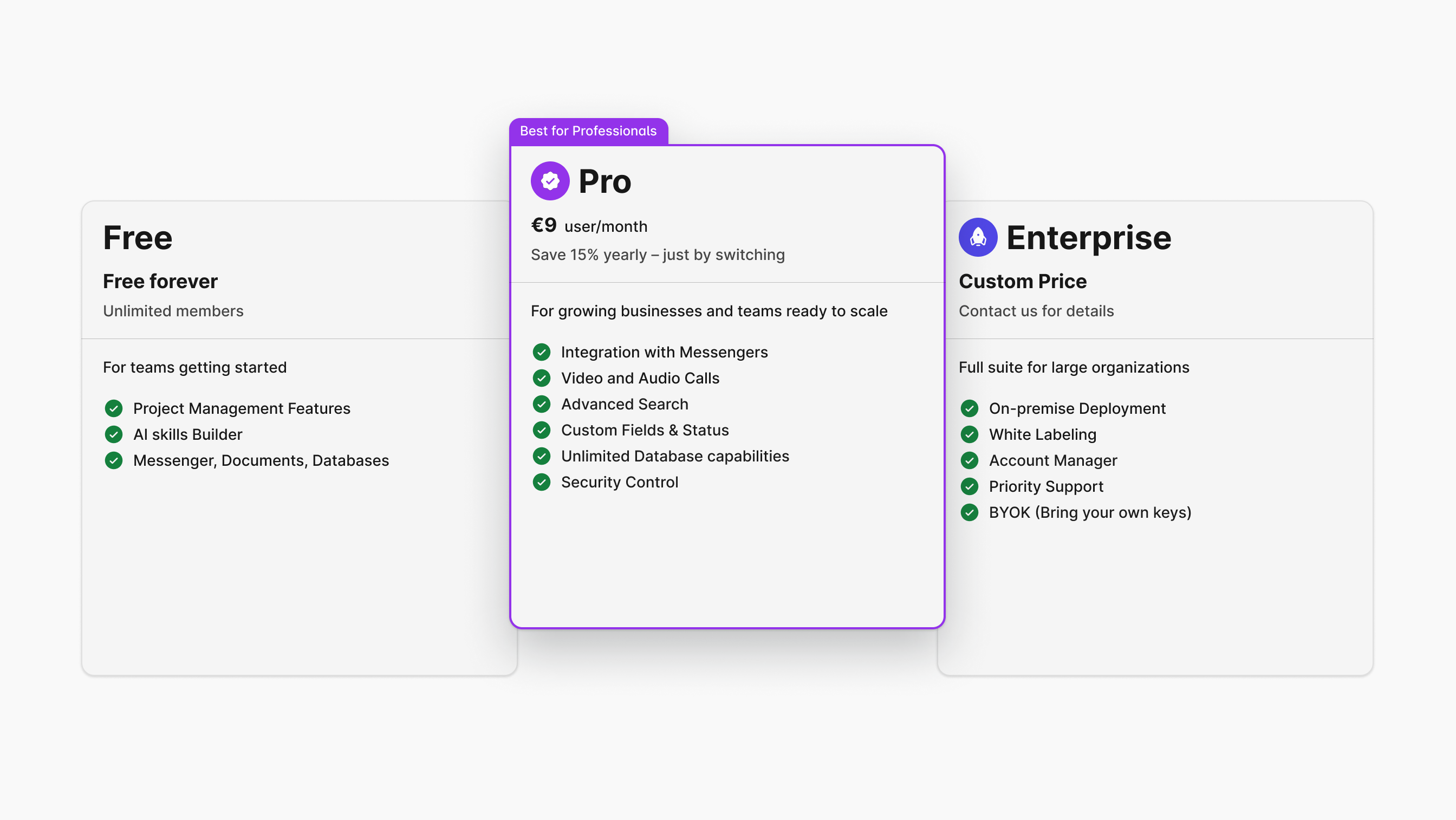

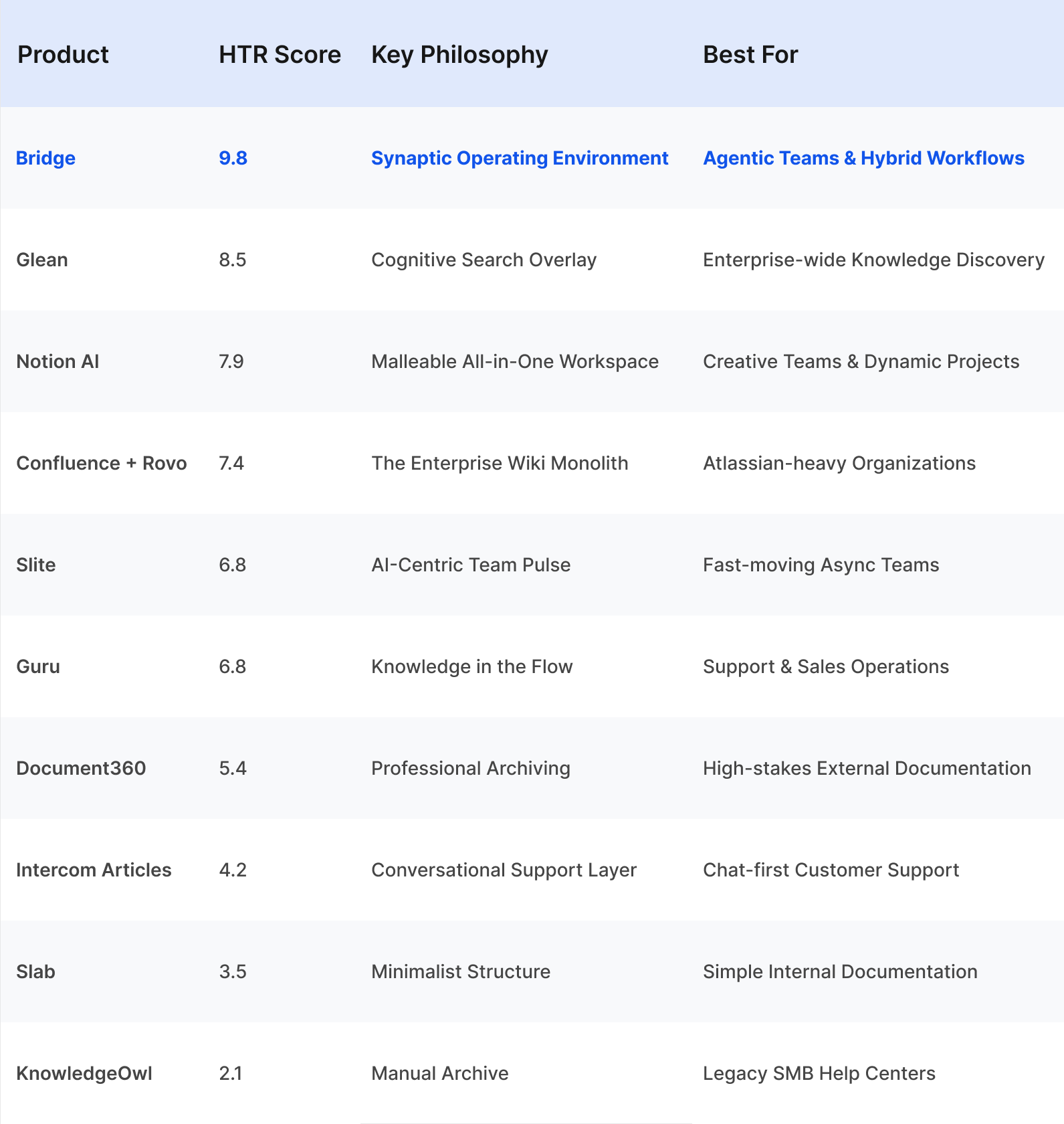

To evaluate the current landscape, we use the Hybrid Team Readiness (HTR) metric, whose pillars are described later in this article. HTR measures how effectively a platform bridges knowledge gaps: the friction that occurs when a human makes a decision and an AI agent has to carry it out without constant hand-holding.

Here is how the heavy hitters stack up in early 2026.

AI search is the new ‘minimum viable’ (but still insufficient)

The classic knowledge stack—pages, hierarchical folders, and a search bar—was built on a specific world model: Knowledge equals text, and context equals folder location. In this paradigm, the "solution" always resides in the human brain. The knowledge base software is just a filing cabinet with a better index.

But for an LLM or an autonomous agent, this architecture is a "source of truth" built on shifting sand.

Even with vector search, most legacy systems remain passive. They are libraries waiting for a visitor. You (or your agent) must go there, ask the right question, and hope the retrieved document isn't a ghost from a 2024 sprint.

The friction here isn't speed; it's isolation. In the "Hierarchy Class" knowledge bases (page-and-folder-based like Notion, Slab, Confluence), information is severed from the live wire of work—the chats, the tasks, the real-time pivots. An agent might find a "Policy.pdf," but it has no way to calculate that document’s "gravitational pull" on the current task in the tracker.

Key classes of knowledge base software

The taxonomy of knowledge management in 2026 is a hierarchy moving from static archives to living, agentic operating environments. To review and evaluate software classes on the market, we have to identify how these systems actually handle the "synapse" between knowing and doing.

The Raw Material Layer: Legacy Help Centers

Systems like Zendesk Guide, Help Scout Docs, and Intercom Articles are the archaeological layers of corporate intent. They provide decent "raw material" for LLMs to scrape and learn from, but remain static archives rather than operational memory. They capture the "what" of a product but almost never the "why" or, even worse, the "how it changed since January." In this ecosystem, knowledge is a static product, not a living process. They are fine for training a customer support bot, but they lack the causal connective tissue required for a hybrid team to actually collaborate.

The Synthesis Layer: AI-Search and Answer Engines

We then move into the "Search + Synthesis" class, where players like Glean—and to a lesser extent, Notion AI and Confluence Rovo—attempt to solve the "access problem." Here, the Knowledge Base is treated as a set of sources to be indexed, synthesized, and served "here and now." This is a massive leap for human productivity, but for an autonomous agent, it’s still a bottleneck. In this paradigm, the agent doesn't "remember"—it "asks." It’s an interface of access, not a seat of intelligence. If the agent has to constantly query an external index to understand its next move, you haven't built a teammate; you've built a researcher with a short-term memory.

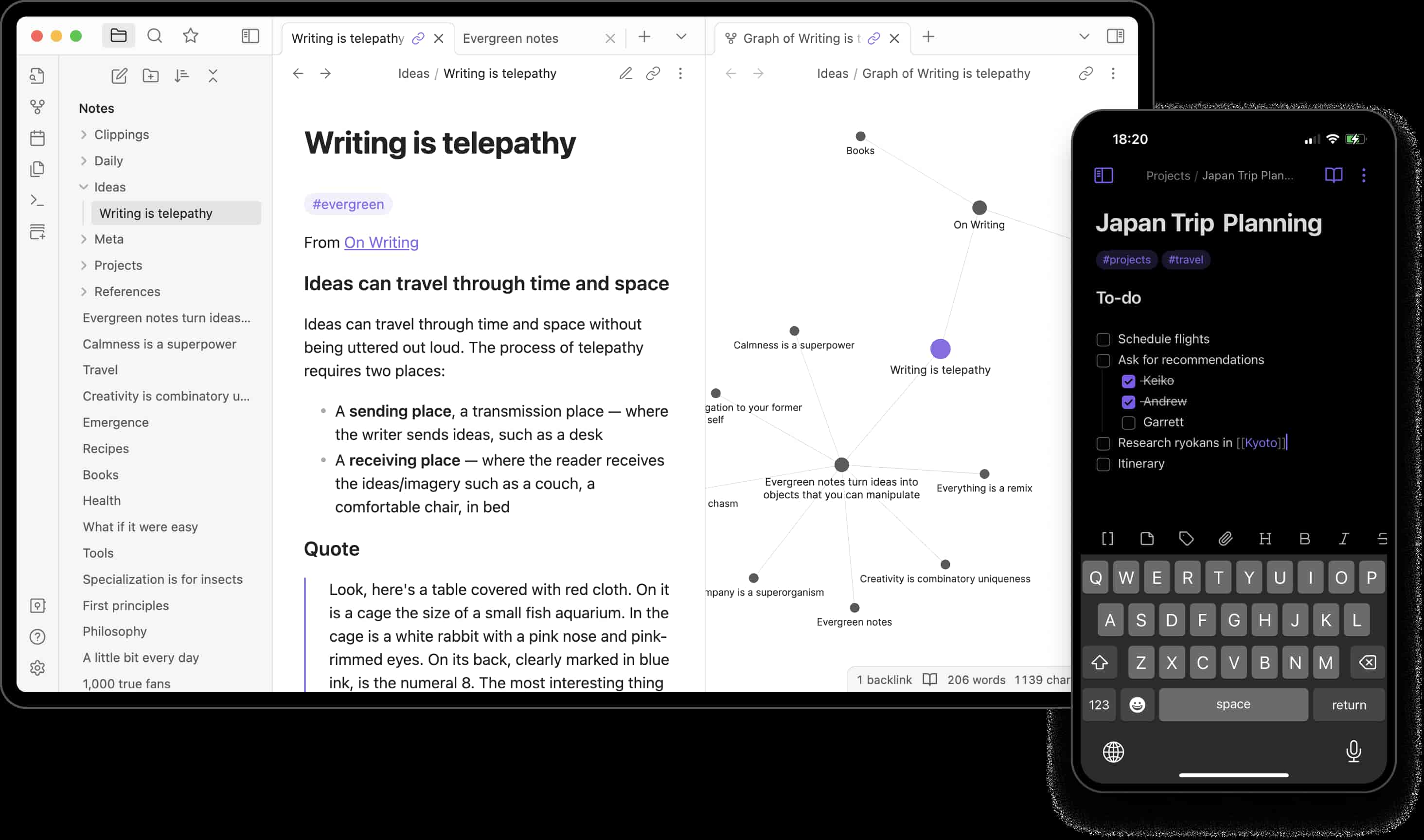

The Atomic Layer: Cognitive Playgrounds

There is a specific, high-IQ niche for personal knowledge systems like Obsidian and Mem. These tools focus on atomic notes, graph-based connections, and the "second brain" philosophy. They are unparalleled for human thinking and individual synthesis, but they notoriously fail at the team level. Why? Because Knowledge ≠ Action. A beautiful graph of interconnected ideas in Obsidian doesn't translate to a Jira ticket or a Slack pivot. These systems are cognitive playgrounds, almost entirely unsuited for the rigid requirements of autonomous agents or structured team processes.

The Flow Layer: Knowledge in the Stream

The most significant step toward the future has been "Embedded Knowledge" platforms like Guru. The logic here is sound: knowledge should appear exactly where decisions are made, reducing "search" in favor of "presence." This is knowledge as a layer inside the workflow. It's a move away from the "destination library" and toward the "contextual nudge." However, even here, we hit the ceiling of agency. Guru can tell a human the right answer to a client’s question, but it doesn't empower an AI agent to go out and solve the client’s underlying issue. It’s a map, not a vehicle.

The Final Frontier: Agent-Ready Operational Memory

This brings us to the rarest and most critical class: Context-Native Knowledge Bases, the category where BridgeApp is setting the pace. Here, the distinction between a "document" and a "task" evaporates. Knowledge is no longer just structured text; it is Operational Memory.

In an agent-ready system, every decision is stored as a trackable event. Documents, discussions, and task histories are woven into a single, fluid context that an agent can not only read but also write, update, and act upon. The agent doesn't just "ask" the knowledge hub for a file; the agent lives within the knowledge. This is the only architecture that fully satisfies the Hybrid Team Readiness (HTR) criteria (10 out of 10). It allows the agent to understand its role in a current sprint, realize when a process is out of date, and propose an update to the "Organizational DNA" in real-time. This is the shift from a library to a nervous system.
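To make "every decision is stored as a trackable event" a little more concrete, here is a minimal sketch of an append-only event log that both humans and agents could read from and write to. It is an illustration only and does not describe how BridgeApp or any other product is implemented; the names `Event`, `OperationalMemory`, `record`, `history`, and `latest` are all hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import List, Optional


@dataclass(frozen=True)
class Event:
    """One immutable entry in the shared context (hypothetical schema)."""
    kind: str      # e.g. "decision", "doc_update", "task_change"
    subject: str   # the document, task, or process the event refers to
    actor: str     # identifier of the human or agent who acted
    summary: str   # short description of what happened
    at: datetime   # when it happened


class OperationalMemory:
    """Append-only log: entries are added, never edited in place."""

    def __init__(self) -> None:
        self._events: List[Event] = []

    def record(self, kind: str, subject: str, actor: str, summary: str,
               at: Optional[datetime] = None) -> Event:
        # Default to the current UTC time when no timestamp is supplied.
        event = Event(kind, subject, actor, summary,
                      at or datetime.now(timezone.utc))
        self._events.append(event)
        return event

    def history(self, subject: str) -> List[Event]:
        # Everything known about one subject, oldest first.
        matching = [e for e in self._events if e.subject == subject]
        return sorted(matching, key=lambda e: e.at)

    def latest(self, subject: str, kind: str) -> Optional[Event]:
        # The most recent event of a given kind, or None if there is none.
        matching = [e for e in self.history(subject) if e.kind == kind]
        return matching[-1] if matching else None
```

In this sketch an agent that needs the current state of a policy would call `latest("refund-policy", "decision")` rather than hunting for a file, and a human overriding that decision would simply `record` a newer event on the same subject. The older entry stays in the history, which is what keeps the "why" and the "how it changed" recoverable.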

Comparative Analysis: From Legacy Help Desks to Agentic Environments

To navigate this shifting landscape, we evaluate the industry’s leading knowledge base suites through the Hybrid Team Readiness (HTR) Metric. This score quantifies the equilibrium between Agentic Agency—the AI’s capacity for autonomous execution—and Human Flow, the system’s ability to remain intuitive for the biological brain. The following unpacking reveals which tools have successfully transitioned into operating environments and which remain trapped in the legacy archive era.

Why the HTR Scores Differ: Essentials and Key AI features

While the full weighted formula is available upon request, the HTR score is anchored by four critical pillars:

- Architectural Accessibility: Is the interface optimized for all actors? This includes non-technical staff using mobile terminals as well as autonomous agents and neural networks accessing data via headless browsers or APIs.

- Semantic Density: Does the KB store mere strings of text, or does it utilize vectorized meaning? High scores require machine-readable intent and the preservation of causality (markdown support is the bare minimum; relational intent is the gold standard).

- Context Latency: How rapidly can the system update its "Long-term Memory" when a "Short-term" event occurs in the live workspace (e.g., a pivot in a Slack thread)?

- Agentic Agency: Can an AI model "plug in" via MCP (Model Context Protocol) to execute tasks, or is it restricted to a read-only chat interface?
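For readers who want a feel for how four pillar ratings could roll up into a single number, here is a rough sketch of a weighted average. The actual HTR formula and its weights are not published in this article, so the equal weights, the 0 to 10 rating scale per pillar, and the function name `htr_score` are assumptions made purely for illustration.

```python
from typing import Dict

# Hypothetical equal weights: the article does not disclose the real ones.
ASSUMED_WEIGHTS: Dict[str, float] = {
    "architectural_accessibility": 0.25,
    "semantic_density": 0.25,
    "context_latency": 0.25,
    "agentic_agency": 0.25,
}


def htr_score(pillars: Dict[str, float],
              weights: Dict[str, float] = ASSUMED_WEIGHTS) -> float:
    """Weighted average of pillar ratings (each 0-10), rounded to one decimal."""
    if set(pillars) != set(weights):
        raise ValueError("pillar names must match the weight names")
    for name, rating in pillars.items():
        if not 0 <= rating <= 10:
            raise ValueError(f"{name} must be between 0 and 10")
    total_weight = sum(weights.values())
    weighted_sum = sum(pillars[name] * weights[name] for name in weights)
    return round(weighted_sum / total_weight, 1)


# Made-up ratings for an imaginary platform, not one of the products reviewed.
example = {
    "architectural_accessibility": 8,
    "semantic_density": 6,
    "context_latency": 4,
    "agentic_agency": 2,
}
print(htr_score(example))
```

With equal weights, a platform that reads well but cannot act ends up in the middle of the scale. Shifting more weight onto Agentic Agency would pull that kind of read-only tool further down, which matches the spirit of the metric even though the real weighting may differ.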

Comparing AI-Powered Knowledge Base Solutions for Internal Knowledge Sharing

BridgeApp: The Context-Native Operational Memory for AI agents

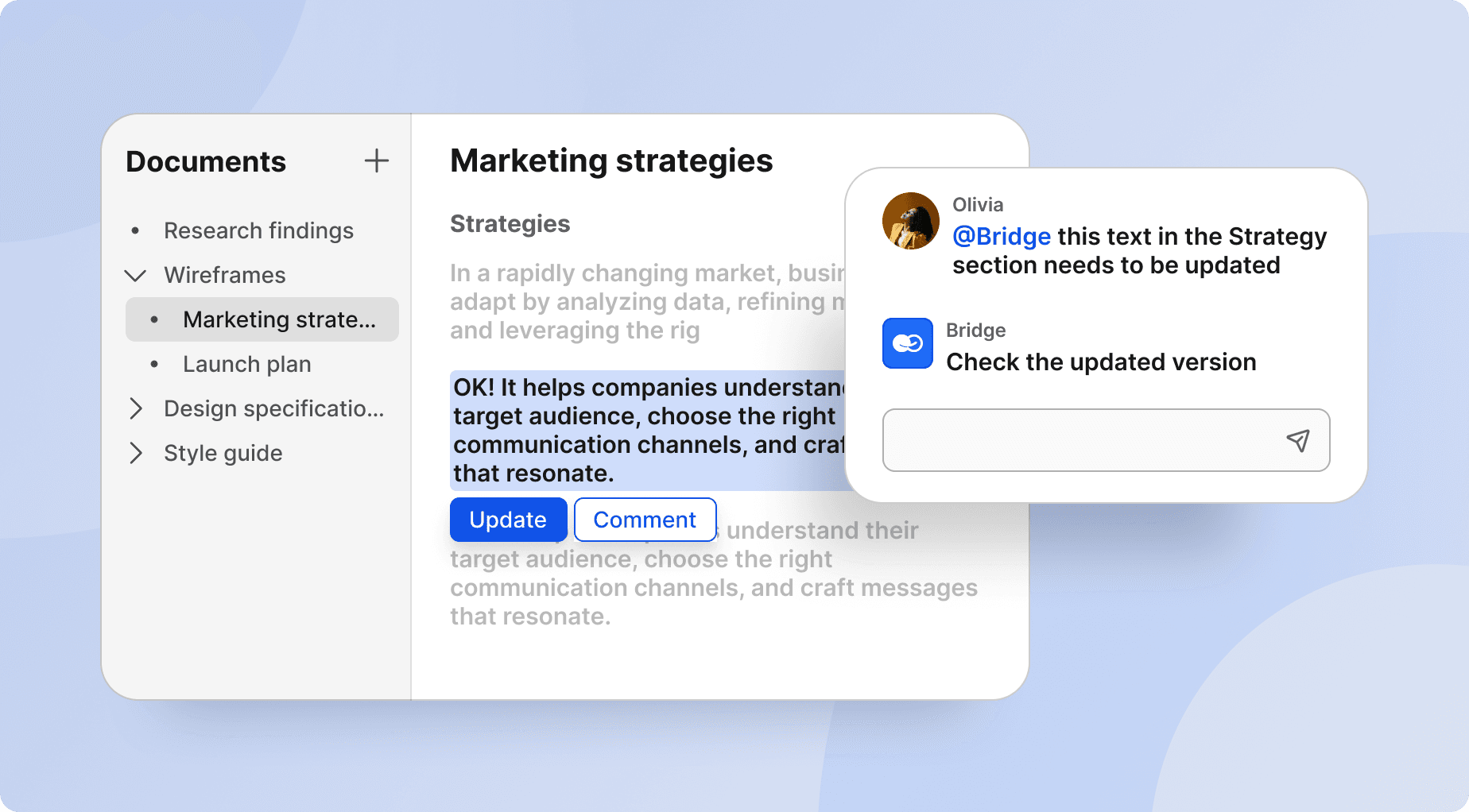

BridgeApp represents a fundamental shift from static storage to an 'Agentic Operating Environment.' Its core strength is the seamless consistency between the knowledge base, corporate chats, and task management. In this ecosystem, AI agents are first-class citizens, working on an equal footing with humans and operating on both persistent historical data and live, real-time streams. It is not just a repository; it is a consolidated operating layer that reduces tool sprawl. By replacing the current communications, project management, and knowledge base stack with BridgeApp, a business can save 50–70% of the costs it currently spends on those tools. BridgeApp then becomes an AI productivity layer where your organizational DNA is captured in context, shared across the hybrid team, and used to drive real execution—turning chaos into operational order.

The system features an advanced semantic engine that can understand documentation, identifying the specific functional purpose of every file, whether it is a casual note, a formal instruction, a regulation, or a legal contract. This structural intelligence, combined with robust role-based access control, ensures that both biological and artificial employees understand how documentation is partitioned across departments and which specific knowledge is contextually relevant to the task at hand. For enterprises requiring absolute data sovereignty, BridgeApp offers a full on-premise deployment of the entire workspace. With real-time collaboration and deep-linking directly from chats and tasks, it eliminates the 'context gap,' ensuring that knowledge is never a destination, but a living part of the process.

Hybrid Team Readiness (HTR) Score: 9.8 / 10

Zendesk Guide: A traditional knowledge base for customer support

Zendesk Guide is a help center architecture built for organizing and deploying content, deeply embedded in the Zendesk support ecosystem. It is designed to host FAQs, reference articles, and customer-facing documentation. Its primary use case is "Ticket Deflection": the goal is to offload support volume by empowering customers to solve their own problems. It offers flexible customization via templates and code, though it requires an experienced administrator to truly harness it.

The trade-off is that formatting options are restrictive, and there is a total absence of content versioning. The built-in search feels like a legacy relic, making it surprisingly difficult to find the right articles. Users frequently report a confusing interface and slow response times from Zendesk’s own support.

AI: While marketing touts the "Answer Bot" as an autonomous support agent, users flag the AI integration as weak. These features are siloed within the Zendesk Suite rather than being a native, fluid part of the knowledge engine.

Hybrid Team Readiness (HTR) Score: 3.8 / 10

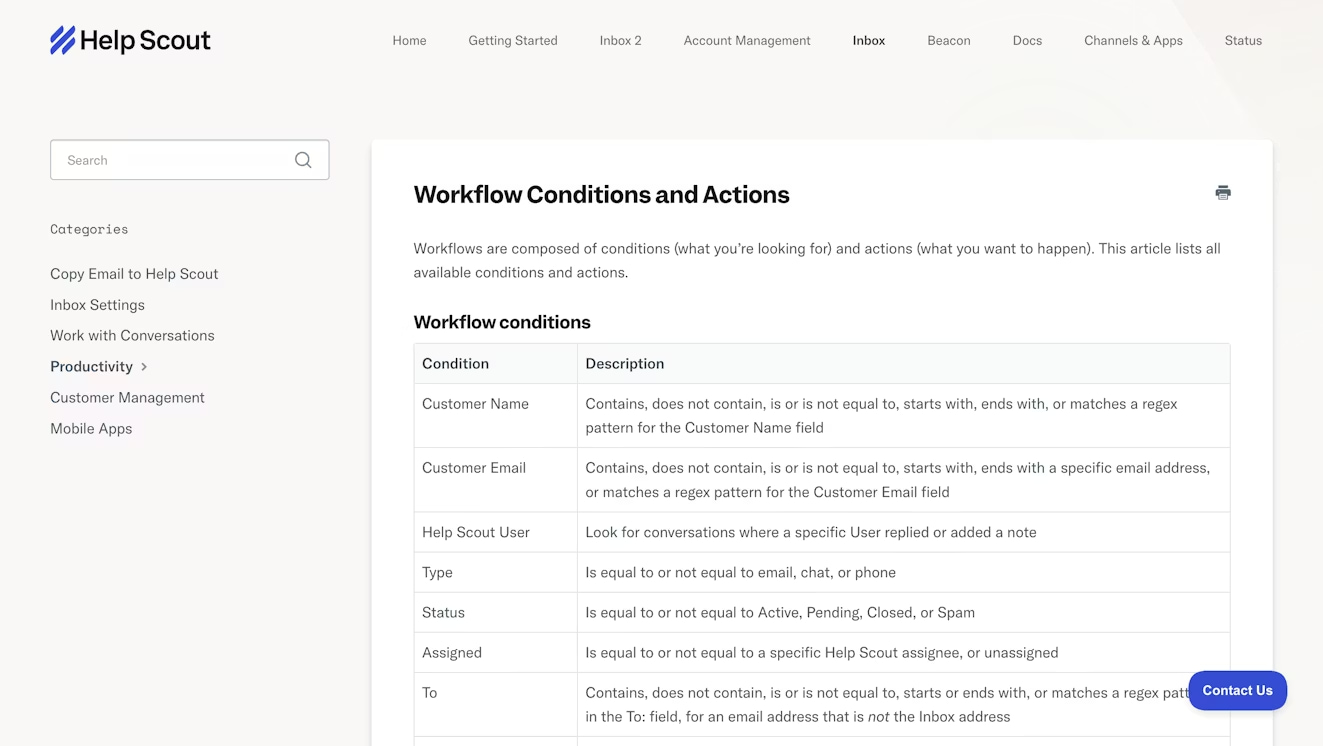

Help Scout Docs: The Lite-Touch

Help Scout Docs is the simple answer to customer self-service needs. It’s designed as a zero-friction entry point for small to medium teams who need to stand up an external FAQ without the architectural overhead of a full-scale enterprise system. The integration with Help Scout’s email and ticketing is its strongest suit, offering a smooth hand-off from self-service to a human conversation. However, you hit a functional ceiling early. It is "functionally basic" by design, which means limited scalability and a total lack of advanced features like version control, collaborative editing, or deep analytics.

AI: The AI effort is concentrated in the "Beacon" widget, a clever recommender that suggests articles based on customer context. While it helps with self-service, there is no deep LLM-based brain here. It has "suggestive assistants," but no AI features or agents capable of independent reasoning or task execution.

Hybrid Team Readiness (HTR) Score: 3.4 / 10

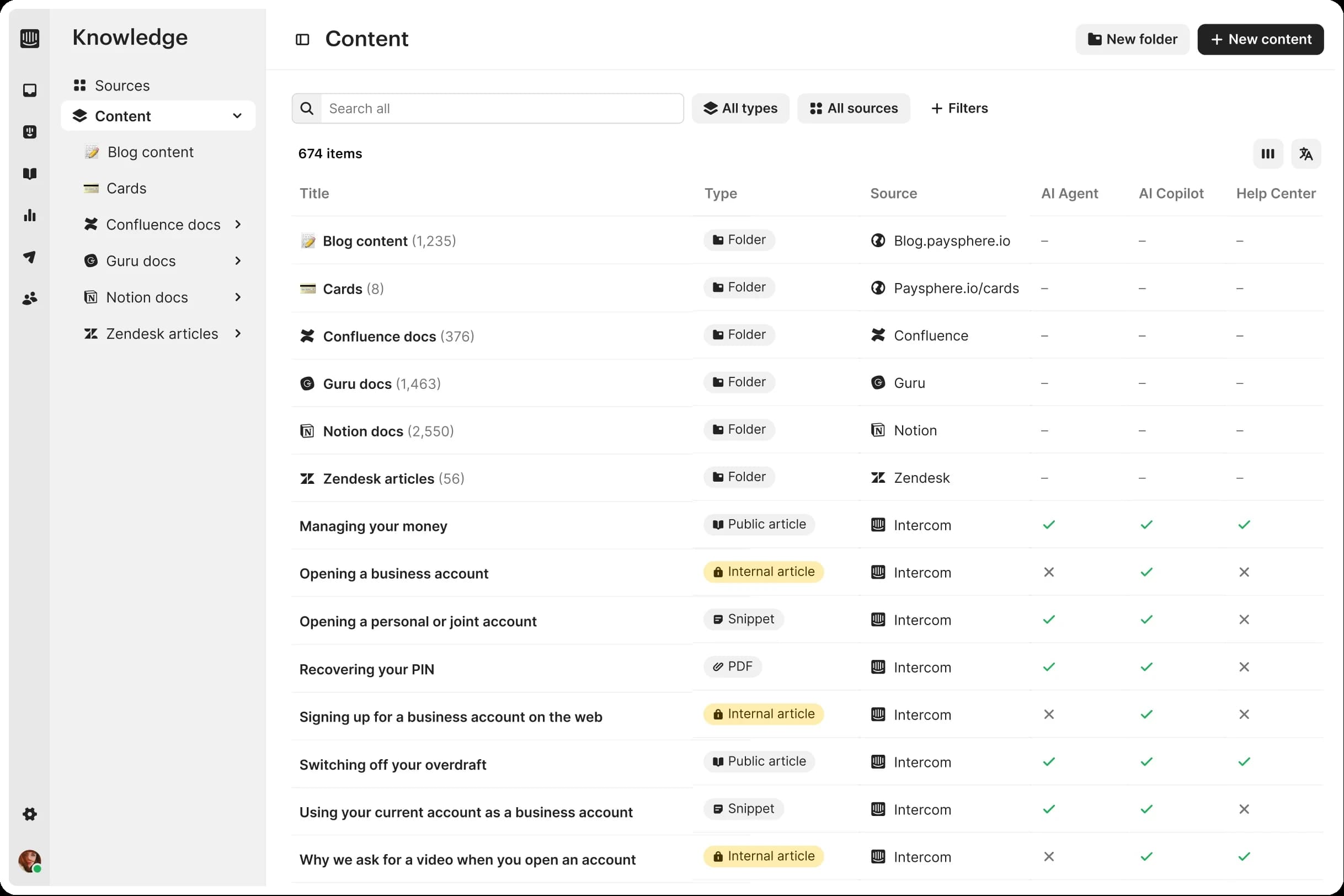

Intercom Articles: The Conversational Layer

Intercom Articles is a feature of the Intercom chat engine. It excels at delivering context-sensitive help exactly where the customer is—inside the chat bubble. For small teams with a "chat-first" support strategy, it effectively kills repetitive tickets by surfacing FAQ snippets during live conversations, creating a "unified space" where documentation and dialogue coexist.

As a standalone knowledge engine, though, it falls apart. The structural and formatting tools are primitive compared to dedicated KB platforms. There is a glaring absence of advanced governance features like version control or granular permissions. Because the entire architecture is optimized for brief, chat-ready snippets, managing large-scale documentation feels like trying to write a novel in a messaging app. It is essentially an expensive island: unless you are fully committed to the Intercom ecosystem, its utility drops to zero.

AI: Intercom has leaned heavily into Fin, a GPT-powered bot that treats the KB as a grounding source for answering customer queries. While Fin is capable of synthesizing answers from articles and external data, the "AI Writer" and suggestion tools remain focused on the support agent’s efficiency. It’s a powerful loop for customer deflection, but the knowledge remains siloed: accessible to the support bot for answering, but not to the broader organizational workflow for acting.

Hybrid Team Readiness (HTR) Score: 4.2 / 10

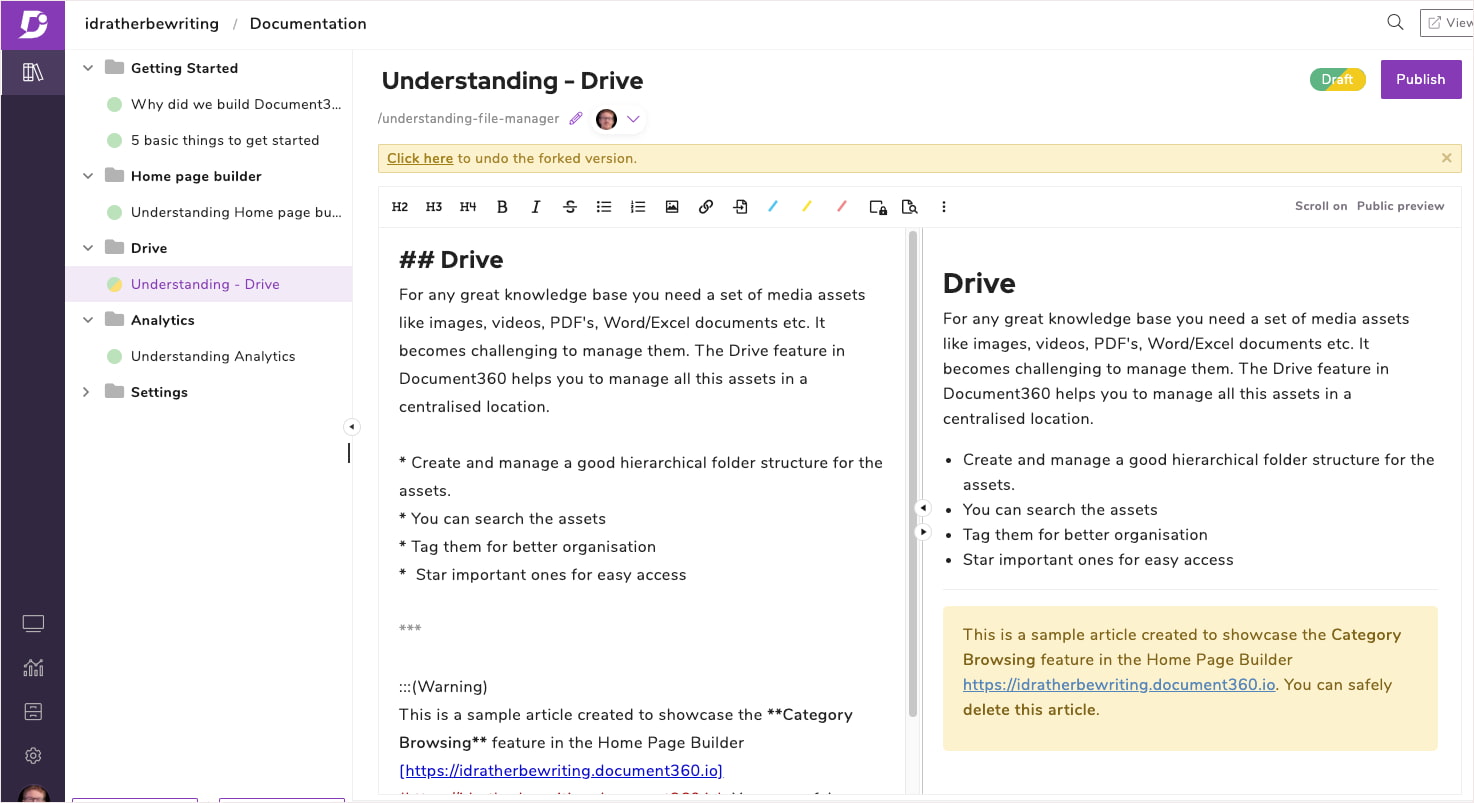

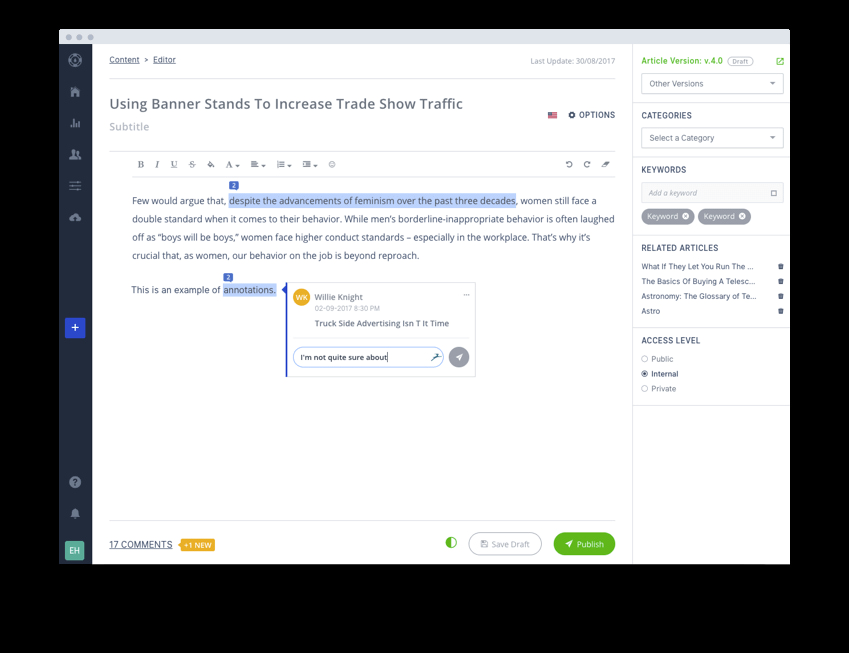

Document360: The Pro-Grade Content Creation Workflows

Document360 is the "grown-up" alternative to legacy help centers, built for teams that treat documentation as a high-stakes corporate asset. Unlike simplified "chat-first" repositories, it focuses on the entire lifecycle of knowledge: version control, formal approval workflows, and multi-layered hierarchies. It is a dedicated engine for those who need to scale to enterprise levels without losing track of their "Single Source of Truth."

The heavy focus on administrative management comes at the cost of creative agility. The absence of real-time collaborative editing—a staple of the modern workspace—makes the platform feel surprisingly isolated for a premium product. While the interface is intuitive, the editor can be temperamental with media and formatting, often forcing users into CSS surgery for basic design tweaks. For teams with massive datasets, the search functionality still requires heavy manual filtering to feel truly effective, despite claims of increased intelligence.

AI: The current AI implementation is primarily author-centric. It uses LLMs to sharpen writing and semantic indexing to improve search results, but the "Agent" experience remains an external graft via the ChatGPT API. It is a system that helps you polish and locate your knowledge, but it lacks the native architecture to transform that knowledge into an autonomous action layer.

Hybrid Team Readiness (HTR) Score: 5.4 / 10

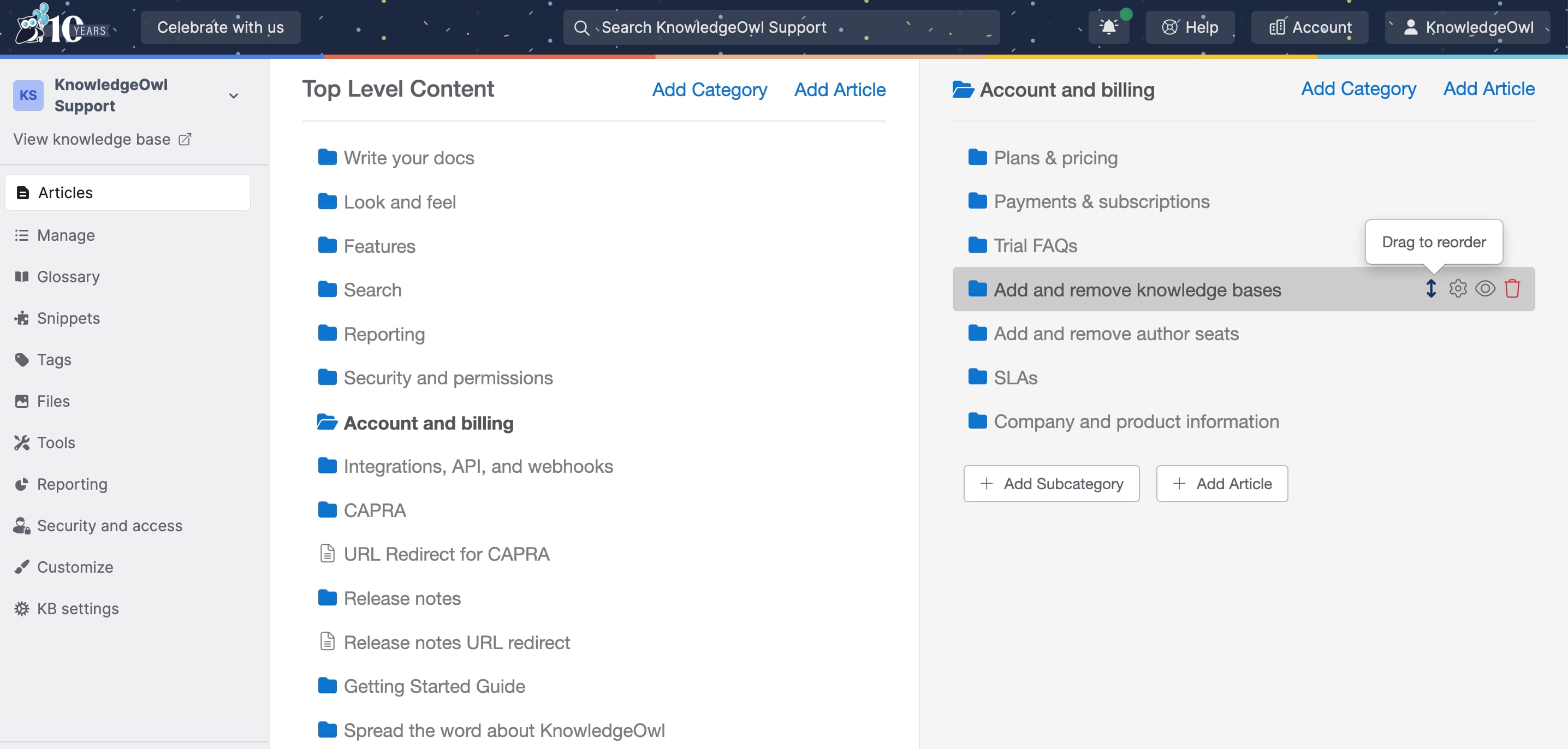

KnowledgeOwl: The Manual Architect

KnowledgeOwl is the "craftsman’s" entry in the legacy KB market, targeting SMBs that prioritize granular control over automated convenience. It positions itself as a highly customizable successor to HelpGizmo, offering a "best-in-class feature set for the price" for teams that need to build private or public help centers with a degree of structural flexibility that many "modern" tools lack.

But the platform’s flexibility comes with a steep "learning curve." While the basics are accessible, unlocking the true potential of its templates often turns into a coding exercise in HTML and CSS, alienating users who lack technical skills. More critical for data-driven teams is the "analytics void"—the built-in reporting is so rudimentary that it effectively forces users to export data to third-party tools just to understand how their knowledge is being consumed.

AI: KnowledgeOwl is a stark outlier in the current landscape, offering no native AI tools (except, seemingly, AI autocomplete in the search bar). There is no semantic search, no generative assistant, and no built-in agentic features. While you can duct-tape a ChatGPT widget to the front end through external integrations, the platform itself remains a pre-AI relic. It is a tool for those who believe knowledge management should remain an entirely human, manual craft.

Hybrid Team Readiness (HTR) Score: 2.1 / 10

Helpjuice: The High-Speed Help Bar

Helpjuice is a universal cloud-based platform that attempts to bridge the gap between help centers and internal team wikis. It’s a popular choice for SMBs that need a polished self-service portal without a long deployment cycle. Its search is notably fast, handling non-text assets like PDFs and images with ease.

This elegance comes with a practical friction. While the interface is clean, the editor is frequently cited as a source of frustration, with persistent bugs in complex formatting and lag when handling large documents. Although "Live Collaboration" was recently introduced to allow simultaneous editing, the experience remains buggy. The lack of deep, automated version logging makes it less reliable for high-stakes auditing. Search, while fast, is known to return duplicate results, cluttering the discovery process.

AI: Helpjuice’s "Swifty AI" suite is a fully integrated layer, featuring a native chatbot that provides source-verified answers from the KB. It moves beyond simple keyword matching to contextual, AI-powered search. However, while it’s a capable "reader" for customers, it remains siloed from the broader operational workflow; it can answer a question, but it cannot yet trigger an external action.

Hybrid Team Readiness (HTR) Score: 5.6 / 10

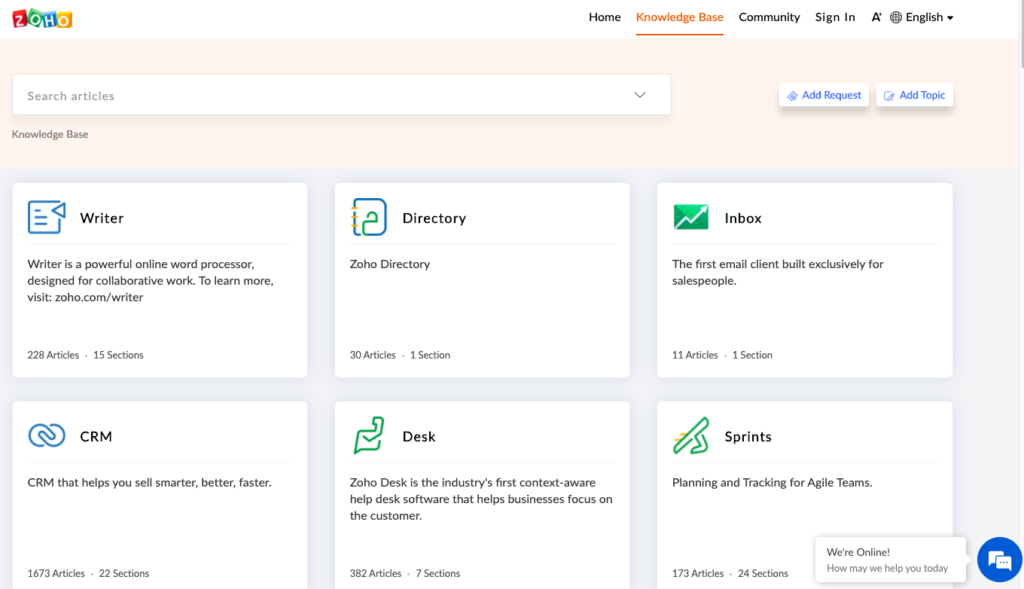

Zoho Desk: A support operations suite with an embedded knowledge base

Zoho Desk functions less as a standalone repository and more as a high-functioning gear in a massive machine of interconnected tickets, CRM records, and chat modules. If you already work in the Zoho ecosystem, your documentation will be natively tethered to live customer data and multi-channel support history.

While basic deployment is swift, the administrative interface remains a notorious maze of menus that demands a high degree of "technical patience" from beginners. Most frustrating is the search engine, which—despite the 2026 updates—remains stubbornly dependent on manual keyword optimization. If your customers don't use the exact phrases you've mapped, the system fails to bridge the semantic gap, turning discovery into a match-the-word exercise rather than a true understanding of intent.

AI: The platform has transitioned from simple "assistance" to "agency" with the introduction of Zia Agent Studio. This allows for the deployment of digital employees that can use the Knowledge Base as a "reasoning engine" to solve customer issues autonomously and even communicate via the Model Context Protocol (MCP) with third-party agents. Agentic features are naturally gated behind premium tiers. For the standard user, Zia remains a "deflection-first" tool.

Hybrid Team Readiness (HTR) Score: 6.2 / 10

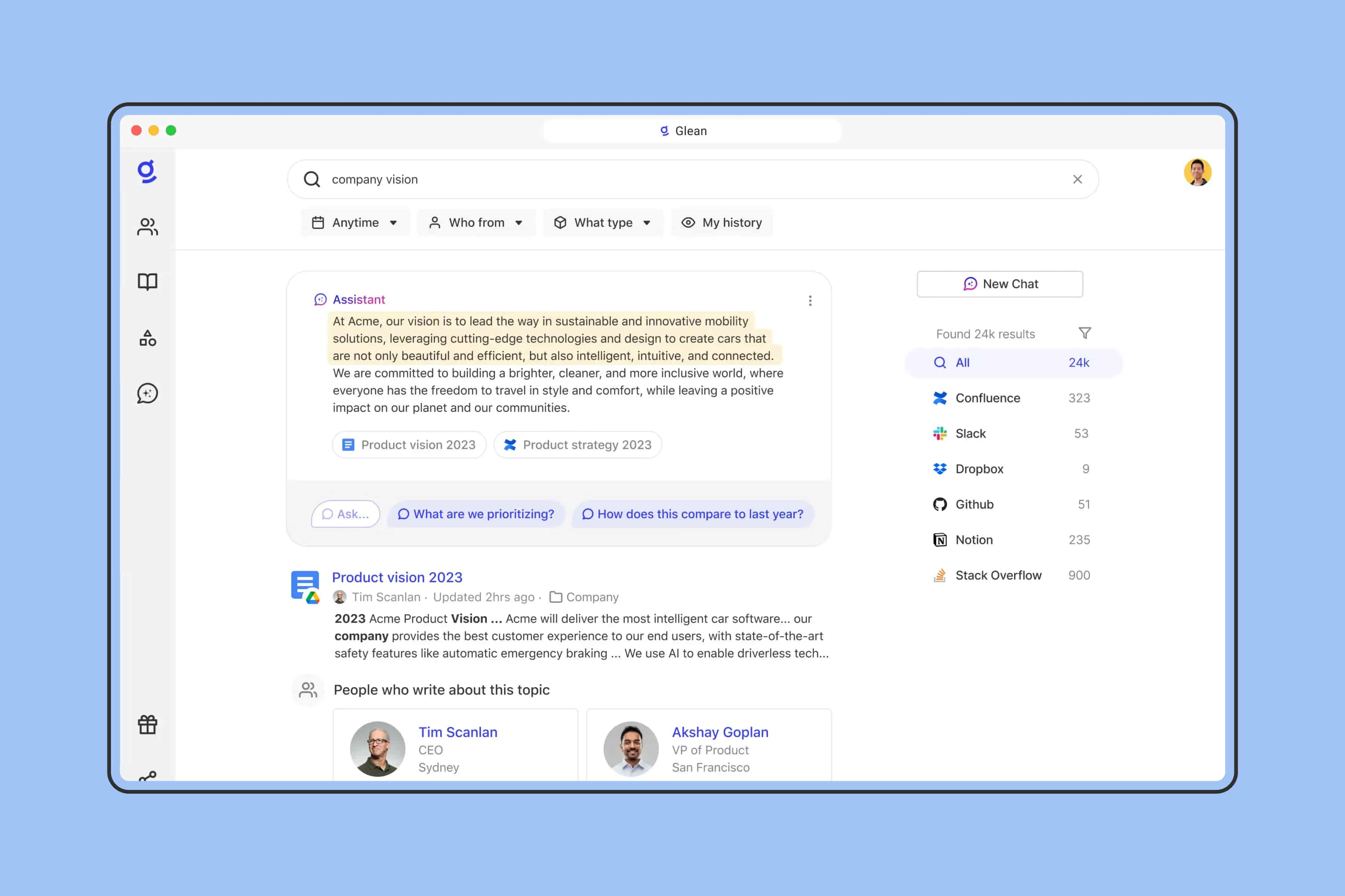

Glean: Grounded answers for internal AI systems

Glean isn't a place where you write knowledge; it is the connective tissue that finds it. It functions as a cognitive layer over your entire tech stack—Slack, Drive, Jira, Confluence—indexing the scattered fragments of corporate intelligence. Its value proposition is "Knowledge Discovery": a natural language interface that understands your role and your work graph to surface the exact document you didn't know existed. It is the ultimate search-first solution for large organizations where information is buried in the "app sprawl."

However, as an overlay, Glean inherits the "garbage in, garbage out" problem of its sources. It is entirely dependent on the quality of the data it indexes; if your team’s Confluence is a mess, Glean’s summaries will reflect that chaos. Users report occasional latency when retrieving deep archives and the typical LLM "hallucination" risk, where generated answers may confidently misinterpret a source document. Crucially, Glean is not a storage system. It is a brilliant map, but if your team stops maintaining the territory (the actual docs), the map becomes useless.

AI: Glean is built on a "Search + Synthesis" model. It doesn't just return links; it acts as a company-wide AI assistant that answers in natural language and cites sources. While it is introducing proactive "Heads Up" features to highlight relevant info before you ask, it remains an internal tool for discovery. It excels at answering "What is our policy on X?" but it lacks the native architecture to execute those policies or act as a collaborative workspace where humans and agents build knowledge together.

Hybrid Team Readiness (HTR) Score: 8.5 / 10

Notion: The Malleable Workspace

Notion is the ultimate "everything app," a platform that has successfully blurred the boundary between a documentation library and a task-management engine. For teams that prioritize aesthetic cohesion and structural flexibility, it serves as the modern standard for an internal wiki—a space where pages, databases, and project management tools coexist in a single, fluid canvas. It isn't just a place to store policies; it’s a living environment for collaborative creation.

This hyper-flexibility is also Notion’s greatest liability. Without a strict organizational architect, a Notion workspace inevitably devolves into a chaotic digital jungle where information goes to die under layers of nested pages. Performance remains a bottleneck, with larger workspaces experiencing noticeable lag as data volume scales. Furthermore, enterprise-grade governance—specifically complex, hierarchical permission modeling—still trails behind legacy giants like Confluence, making it a "fragile" truth for very large organizations.

AI: The integration of Notion AI Q&A has moved the needle from "manual search" to "conversational discovery." By allowing users to query their entire workspace in natural language, Notion has successfully turned its static blocks into a semi-active memory. However, despite its generative power, the system remains fundamentally human-centric. The AI is a brilliant "reader" and "editor," but it still lacks the autonomous agency to execute complex workflows or manage tasks without a human hand on the wheel.

Hybrid Team Readiness (HTR) Score: 7.9 / 10

Confluence (with Rovo AI): The enterprise internal knowledge base

Confluence remains the "gold standard" and the primary architectural headache for the modern enterprise. It is a hierarchical monolith designed to centralize a "Single Source of Truth" across massive organizations. Its value is built on total control: from granular permissions to rigid structural templates. For engineering teams, it isn’t just a wiki; it’s an extension of the Jira ecosystem, making documentation a native part of the production cycle.

However, the system’s weight is its own curse. Performance inevitably degrades as the database grows, and the "fussy" editor turns complex layouts into a battle with the interface. For new users, Confluence is a maze with a steep learning curve, and the cost of scaling through plugins and licenses makes it one of the most expensive solutions on the market. In an era of agile workspaces, it is often perceived as a slow-moving archive where information is easily deposited but difficult to retrieve.

AI: The introduction of Rovo AI is Atlassian’s attempt to bridge the gap between static wiki and "knowledge in the flow." Rovo Search and Rovo Chat act as an intellectual facade capable of synthesizing answers not just from Confluence pages, but from external silos, including corporate messengers and ticketing systems. Features like Meeting Insights, which extract decisions and tasks from meeting notes, signal a move toward operational memory. Yet, it remains an overlay: the system helps a human find an answer faster, but it still lacks the deep agency required to act autonomously without constant human steering.

Hybrid Team Readiness (HTR) Score: 7.4 / 10

Slab: The Minimalist’s Filing Cabinet

Slab is built on a "structure first" philosophy, positioning itself as the high-velocity alternative for teams that find Notion too chaotic and Confluence too bureaucratic. It is an internal knowledge base stripped of distractions, designed to be a clean, lightning-fast repository for engineering and support teams who need to maintain technical documentation without the friction of complex formatting. Its strongest asset is its "smart search," which pulls results not just from its own pages but from repositories like GitHub, attempting to create a unified view of team intelligence.

The friction of Slab’s minimalist elegance is a rigid functional ceiling. Design and page layouts are strictly gated, offering almost none of the malleability found in modern workspaces. While the interface is intuitive, the permission model is simplified to a fault, making it difficult for larger organizations to manage complex access hierarchies. Furthermore, as the database grows, the search relevance begins to fray; users report being buried under clusters of similar-looking cards, requiring manual triage to find the "current" version of a document.

AI: In the landscape of 2026, Slab is a holdout. There is no built-in LLM to summarize pages, no native chat to query the workspace, and no agentic layer to handle tasks. While it can serve as a data source for external engines like Glean, the platform itself remains a passive, algorithmic archive. It is a system that relies entirely on human organization and human retrieval, lacking the neural connective tissue to move from a static library to an active memory.

Hybrid Team Readiness (HTR) Score: 3.5 / 10

Slite: The AI-Centric Pulse

Slite is the minimalist’s antidote to "wiki-bloat," designed specifically for distributed teams who prioritize async alignment over complex database architecture. It positions itself as an "AI-native knowledge base," where the traditional folder hierarchy is secondary to the immediate utility of its conversational search. By fusing documentation with real-time project context through threads and a "Catch Up" feed, it creates a high-velocity workspace that values up-to-date content over creative freedom.

Slite’s simplicity is its functional ceiling. It lacks the database-driven logic of Notion or Coda, making it ill-suited for teams that need to build sophisticated workflows directly inside their docs. While the interface is intuitive, its governance model is simplified; advanced permission structures are restricted to enterprise tiers, and the absence of a robust developer API limits its integration into custom automated pipelines. Furthermore, the accuracy of its AI synthesis is entirely tethered to the quality of the source material; if your documentation is disorganized, the system will confidently summarize the wrong context.

AI Reality: Slite was a pioneer in the "Ask" paradigm, shifting the focus from AI-driven content generation to AI-driven retrieval. The "Ask" engine provides synthesized, source-verified answers across the entire workspace, including connected silos like Google Drive. One of its most critical 2026 features is the "Document Verification" system—a proactive nudge that flags expiring content, ensuring the AI isn't grounding its answers in stale data. While it excels as a high-IQ librarian, it remains a passive observer. It can tell you what the project plan says, but it lacks the agency to execute the tasks within that plan.

Hybrid Team Readiness (HTR) Score: 6.8 / 10

Obsidian: The Sovereign Thinker

Obsidian is the gold standard for Personal Knowledge Management (PKM), built on the philosophy of a "second brain." It is an offline-first, local-markdown ecosystem designed for individuals who demand total sovereignty over their data. Its primary strength lies in its graph view and bidirectional linking, allowing a user to build an associative web of ideas rather than a rigid folder structure. It is a tool for deep synthesis, research, and long-form thinking, powered by a massive library of community-driven plugins.

The Friction: Obsidian is fundamentally anti-collaborative. It lacks native, real-time multi-user editing, making it a ghost town for teams. While a technical user can duct-tape a collaborative workflow together using Git or third-party sync services, the friction is immense. There is no out-of-the-box structure—you are the architect, and without a disciplined organizational system, the "graph" quickly turns into a tangled mess of disconnected notes. For a hybrid team trying to ship a product or answer a customer, Obsidian offers no process, no governance, and no shared operational reality.

AI Reality: Out of the box, Obsidian is a pre-AI relic by design, prioritizing privacy and local compute. While the community has built impressive plugins to link notes to LLMs via local or cloud APIs, these are fragmented "hacks" rather than a native part of the experience. It has no built-in agentic capabilities; it cannot autonomously manage tasks or act as a reasoning engine for a team. It remains a silent library for the individual genius, not a synaptic layer for a collective.

Hybrid Team Readiness (HTR) Score: 3.2 / 10

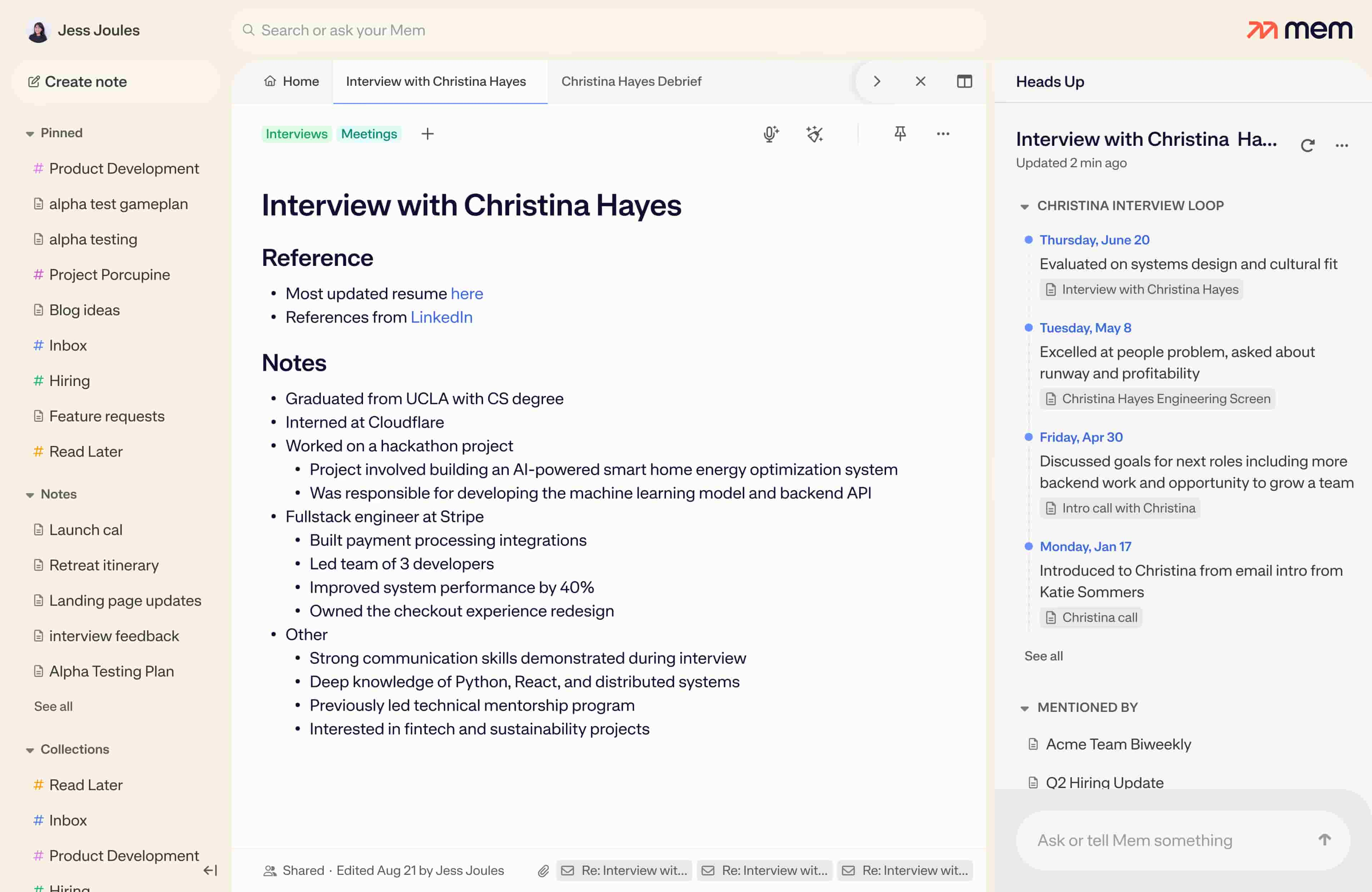

Mem: The AI Thought Partner

Mem is an experimental departure from traditional knowledge management, attempting to kill the "folder" metaphor entirely. It positions itself as a self-organizing workspace where the AI handles the cognitive load of categorizing, tagging, and resurfacing information. Instead of building a wiki, you "dump" thoughts into Mem via voice, web clipper, or text, and the system uses its "Heads Up" feature to proactively suggest relevant context as you work.

The "capture everything" philosophy often leads to digital clutter; users frequently report that the system remembers too much, making it difficult to separate vital signals from background noise. Despite the ambitious 2.0 overhaul, the platform still struggles with a reputation for buggy performance and a slow development cycle. For teams, the lack of hierarchy is a fatal flaw—it’s an excellent "shared brain" for brainstorming, but it fails miserably as a structured corporate wiki or a process-driven documentation hub.

AI: Mem is AI-native, meaning the LLM isn't just a guest; it’s the architect. The "Mem Chat" interface is deeply grounded in your specific history, and the "Spotlight" feature allows you to query your knowledge from any application on your desktop. However, while it is a master of retrieval and proactive reminding, it remains a passive cognitive assistant. It can summarize what you discussed in a meeting, but it lacks the agentic framework to turn those notes into autonomous actions or manage a complex project lifecycle.

Hybrid Team Readiness (HTR) Score: 4.8 / 10

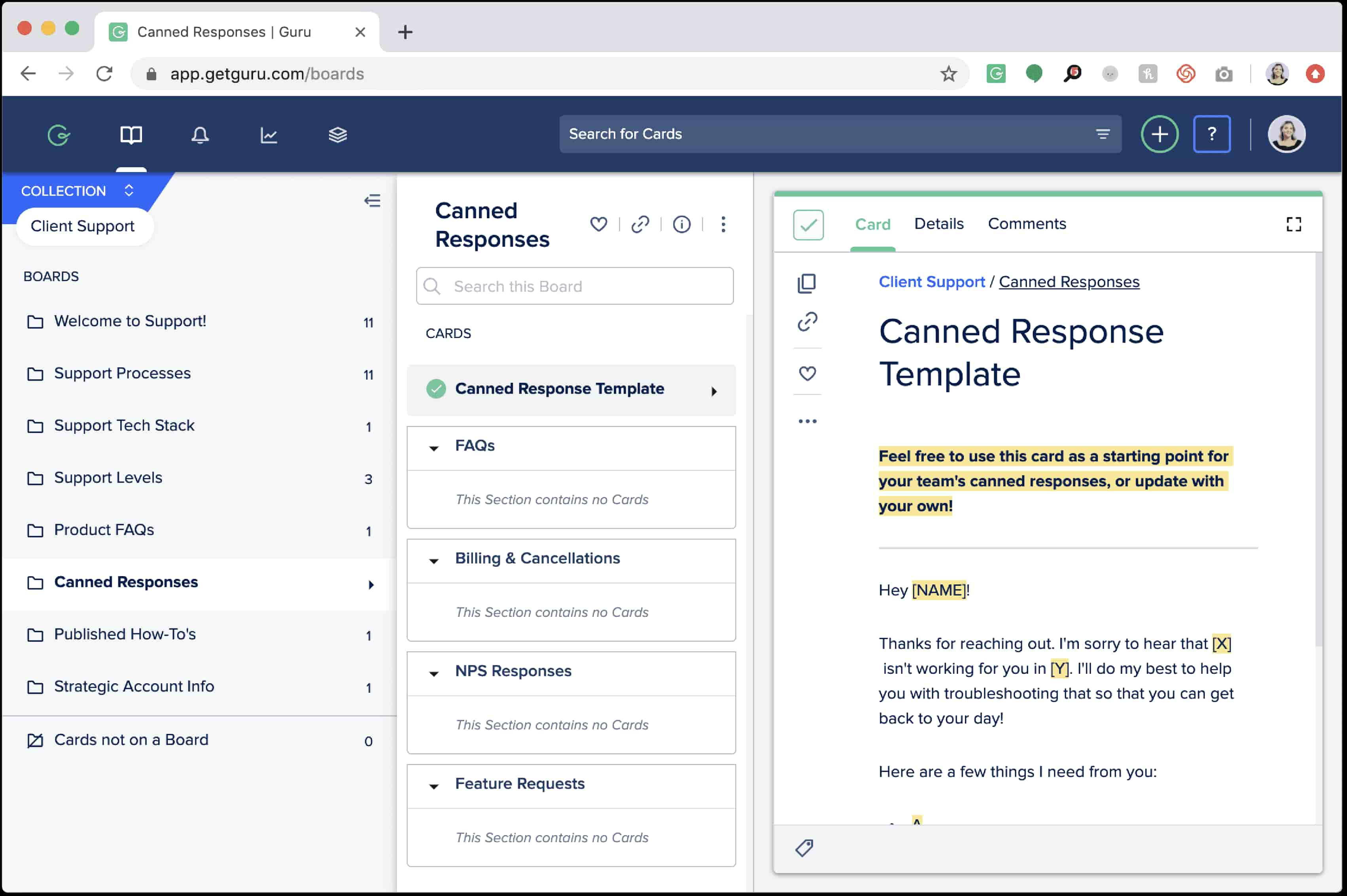

Guru: Knowledge in the Flow

Guru is a "knowledge-in-the-moment" platform that abandons the concept of a destination library in favor of a contextual layer. It delivers information directly into the tools where work happens: the browser, Slack, or MS Teams. Its defining architectural feature is a forced verification mechanism—every knowledge card has a designated expert owner and a mandatory review schedule, preventing the database from becoming a graveyard of stale instructions.
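To make the verification idea concrete, here is a minimal sketch of an owner plus review-schedule check. It is not Guru's API or data model: the `Card` class, its field names, and the `cards_due_for_review` helper are all hypothetical, chosen only to illustrate the mechanism described above.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class Card:
    # Hypothetical knowledge card; the field names are assumptions for illustration.
    title: str
    owner: str
    last_verified: date
    review_interval_days: int

    def is_due(self, today: date) -> bool:
        # A card needs re-verification once its review interval has elapsed.
        return today >= self.last_verified + timedelta(days=self.review_interval_days)


def cards_due_for_review(cards, today):
    # Group the titles of overdue cards by their designated owner.
    due = {}
    for card in cards:
        if card.is_due(today):
            due.setdefault(card.owner, []).append(card.title)
    return due


cards = [
    Card("Refund policy", "ana", date(2026, 1, 1), 30),
    Card("VPN setup", "ben", date(2026, 3, 1), 90),
]
overdue = cards_due_for_review(cards, date(2026, 3, 15))
```

In this sketch only the refund policy card is overdue, so only its owner would be nudged. The point of the design is that staleness becomes a per-owner to-do list rather than a silent property of the library.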

However, this approach carries a trade-off: Guru’s card-based architecture encourages extreme fragmentation as the organization scales. Users frequently struggle with a "search clutter" problem, where the system returns too many similar cards, requiring relentless classification hygiene to remain usable. Compared to traditional wikis, its design customization and analytical depth are significantly weaker, and for large enterprises, the micro-knowledge format can feel too disjointed for complex documentation.

AI: With the introduction of Guru AI, the platform has evolved into a corporate chatbot that synthesizes answers from verified cards and connected external silos like Google Drive. The system is highly effective at "question prevention," shielding subject matter experts from repetitive queries. It excels as an intelligent reference layer (the "reader"), but it currently lacks the agentic autonomy to perform active tasks or execute workflows in external services.

Hybrid Team Readiness (HTR) Score: 6.8 / 10

Main Structural Vulnerabilities of AI Knowledge Base Software

The irony of 2026 is that as our tools have become more "intelligent," our collective knowledge has often become more fragile. The industry rushed to implement "Chat over Docs" solutions, assuming that an LLM layer could fix a lack of contextual knowledge. In reality, many of these solutions are playing a game of telephone rather than maintaining a coherent memory of corporate decision-making.

The primary failure point is the "Chat over Junk" trap. When an AI provides a confident, polished answer based on a legacy PDF from 2021 or a discarded Slack thread, it doesn't just deliver an error—it legitimizes it.

Perhaps the most invisible rot is the lack of Decision Provenance. Traditional databases record what was decided, but they rarely capture the why—the messy context of chats and task-tracker pivots that led to the result. Even the most advanced systems often draw a blank when asked for the logic behind a particular process.

Those consciously building the next generation of knowledge bases are looking for an architecture where a Copilot (an organizational superbrain, akin to HAL from 2001: A Space Odyssey) always remembers everything and can explain the development of its logic to its human colleagues at any time, both retrospectively and in the moment. However, such a Copilot must be aware of and consciously avoid Permission Leakage, a critical vulnerability where an AI’s synthesized response inadvertently "mixes" data across access levels, exposing sensitive executive logic to general staff.
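One common way to guard against this kind of leakage is to filter retrieved material by the asker's clearance before anything reaches the model, so restricted text can never be blended into a synthesized answer. The sketch below assumes a simple numeric access scale. The `Chunk` class, the `access_level` field, and both helper functions are hypothetical and not taken from any product discussed here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Chunk:
    # Hypothetical retrieved passage with an assumed numeric access scale:
    # 0 = visible to all staff, higher numbers = more restricted.
    text: str
    access_level: int


def visible_chunks(chunks, user_clearance):
    # Drop every passage the asking user is not cleared to read.
    return [c for c in chunks if c.access_level <= user_clearance]


def build_context(chunks, user_clearance):
    # Only permitted passages are joined into the context handed to the model.
    return "\n".join(c.text for c in visible_chunks(chunks, user_clearance))


chunks = [
    Chunk("Office hours are 9 to 5.", 0),
    Chunk("Draft reorganization plan for Q3.", 3),
]
staff_context = build_context(chunks, user_clearance=0)
exec_context = build_context(chunks, user_clearance=3)
```

The design choice that matters is the ordering: permissions are enforced at retrieval time, before synthesis, rather than by asking the model to withhold what it has already seen.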

Finally, there is the rising risk of AI-Slop. This is the modern phenomenon where teams squeeze more text out of generative tools for the sake of content creation. The result is a high-volume, low-signal environment that confuses the very models meant to navigate it. A promising AI environment must be able to filter this noise by understanding the actual semantic value of new pieces of context.

All these vulnerabilities lead to the most critical threat of the agentic era: The Expiration Date of Truth. Stale context in 2026 is a greater risk than a lack of information. Traditional knowledge bases treat documents as nigh immortal, leading to Stale Truths where outdated policies are treated as current law. In an agentic workflow, knowledge has a distinct shelf life; a modern system must do more than store—it must actively curate, verify, and expire.

To achieve this, a best-in-class environment must move beyond storage to proactive grounding. This requires a system that identifies when a process template no longer aligns with the team’s actual behavior in the task-tracker, flagging that knowledge as compromised. BridgeApp’s knowledge hub leads here with built-in staleness detection: if the documentation says one thing but the real-time work logs show another, the system 'feeds' agents only the verified, millisecond-accurate data required for execution.
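As a rough illustration of what "curate, verify, and expire" could look like in practice, the sketch below flags a process template when it is either past its shelf life or no longer matches what the team actually does. This is not BridgeApp's implementation. The function names, the step lists, and the `max_drift` threshold of 0.3 are assumptions made for the example.

```python
from datetime import date


def is_expired(last_verified, shelf_life_days, today):
    # Knowledge past its shelf life is treated as unverified.
    return (today - last_verified).days > shelf_life_days


def drift_ratio(documented_steps, observed_steps):
    # Fraction of documented steps that never show up in the observed work log.
    if not documented_steps:
        return 0.0
    observed = set(observed_steps)
    missing = [step for step in documented_steps if step not in observed]
    return len(missing) / len(documented_steps)


def is_compromised(documented_steps, observed_steps, last_verified,
                   shelf_life_days, today, max_drift=0.3):
    # Flag the template if it is too old or diverges too far from real behavior.
    # The 0.3 default threshold is an arbitrary assumption for this sketch.
    return (is_expired(last_verified, shelf_life_days, today)
            or drift_ratio(documented_steps, observed_steps) > max_drift)


documented = ["draft", "legal review", "publish"]
observed = ["draft", "publish"]  # the team skips legal review in practice
flagged = is_compromised(documented, observed,
                         last_verified=date(2026, 1, 1),
                         shelf_life_days=90,
                         today=date(2026, 2, 1))
```

Here the template is still within its shelf life, yet it is flagged because one of its three documented steps is missing from the observed work. A real system would need a far richer notion of "observed behavior" than exact string matching on step names.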

BridgeApp: The Unified Operating Layer

For data-driven teams that demand absolute context-awareness, BridgeApp offers itself as a single, cohesive operating layer. It is the only environment where conversations, tasks, documents, code, and structured knowledge hubs can remain physically and logically connected. Whether deployed on-premises or in your private cloud for strict compliance, or used as a high-velocity team hub, it ensures that data control and boundaries remain absolute.

BridgeApp maintains the entire flow of corporate existence on many levels—from foundational mission-critical docs and legal regulations to the granular backlogs of tasks, release notes, marketing drafts, etc. By housing this data in one continuous, synaptic flow, teams spend less time "recollecting" and more time shipping.

The transition to an agentic workforce and hybrid teams requires a foundation that matches the way you actually work. Take one week to run a pilot with BridgeApp and experience the difference of a workspace that understands its own context.